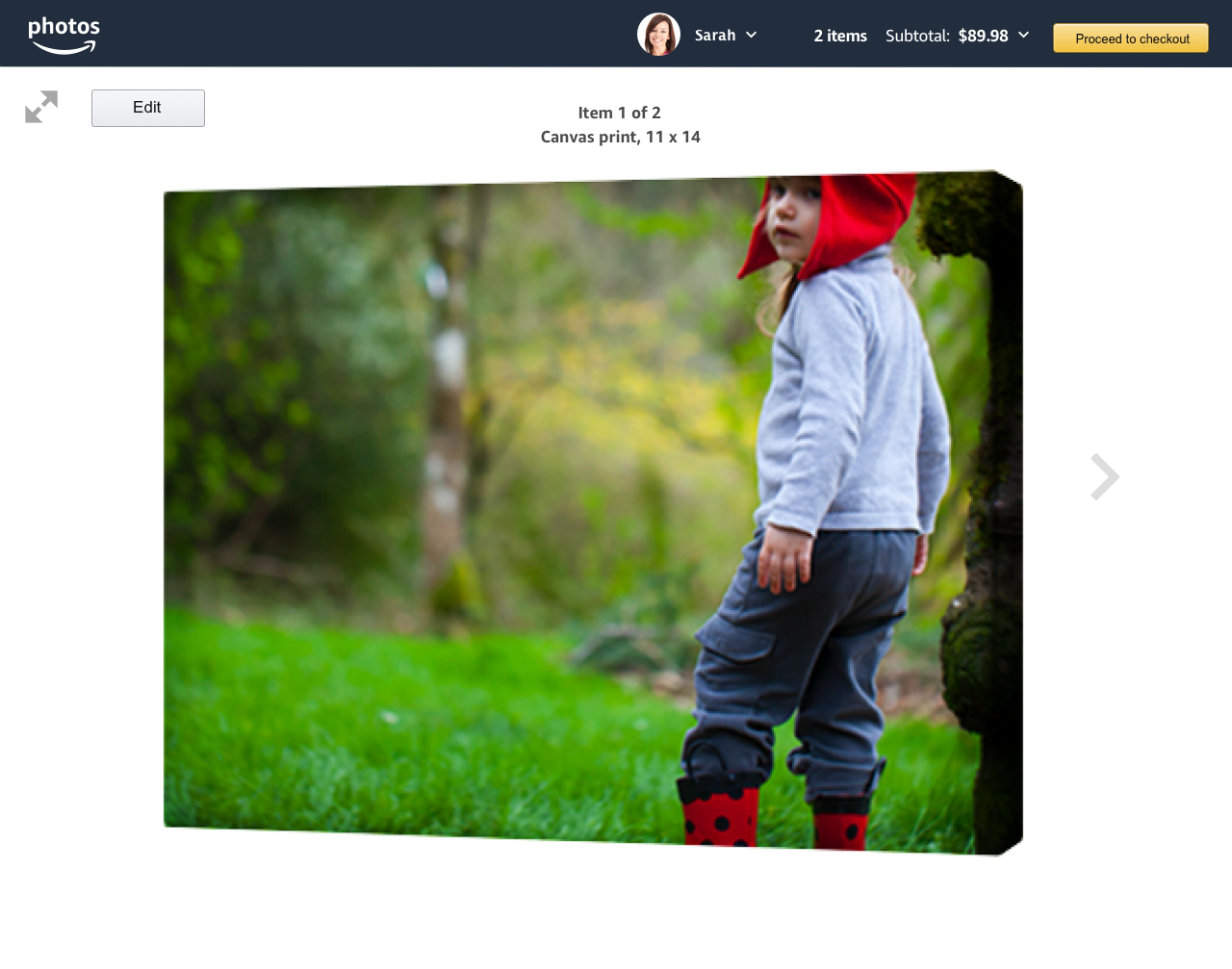

Amazon Prints

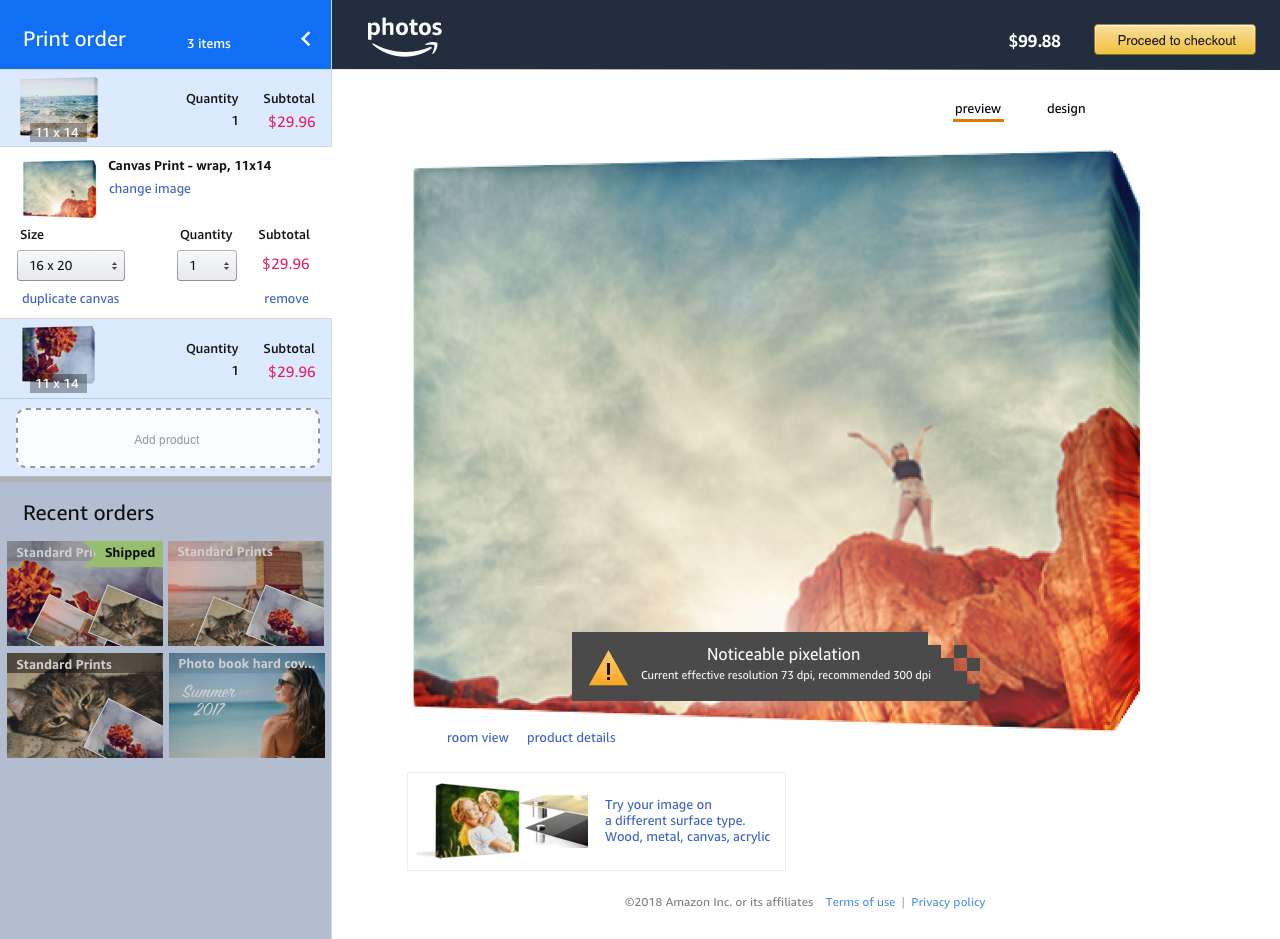

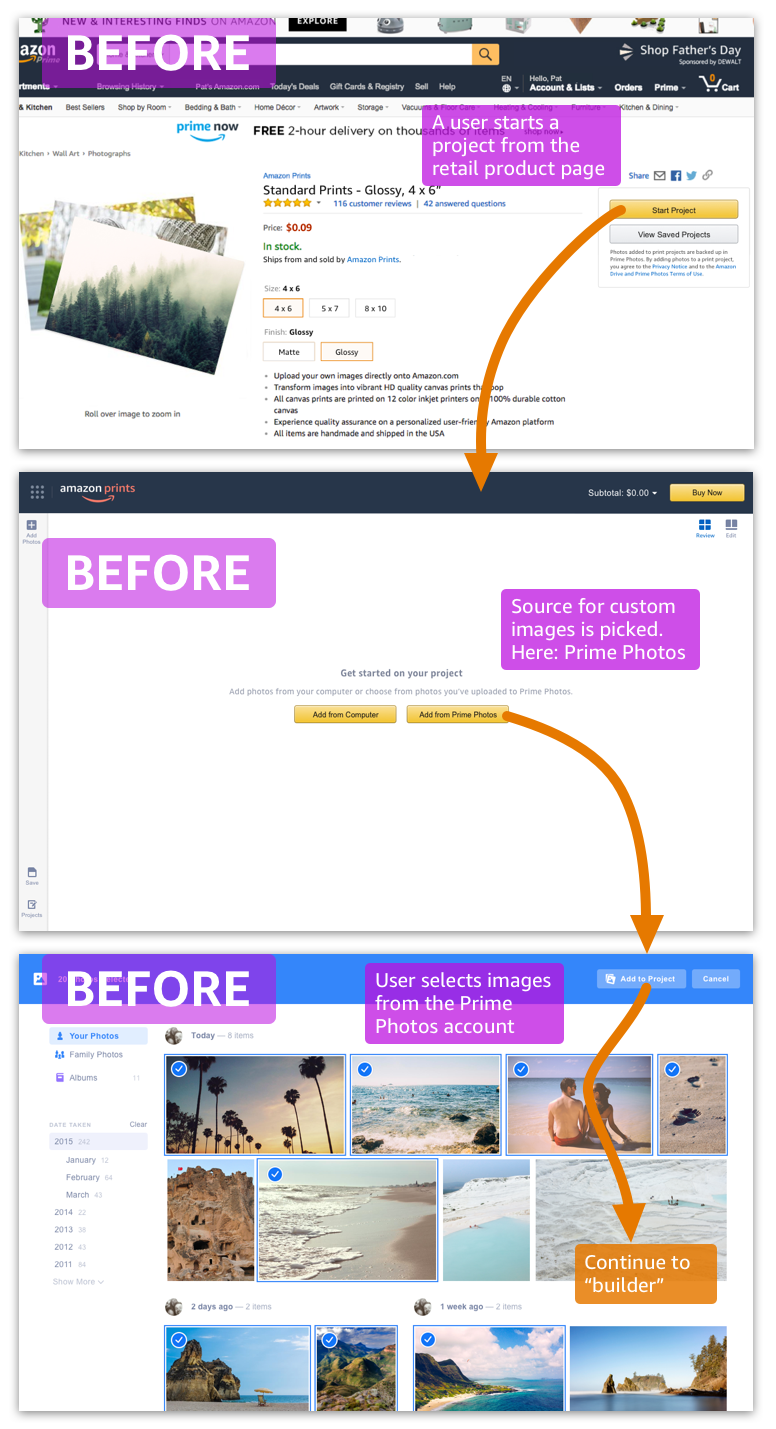

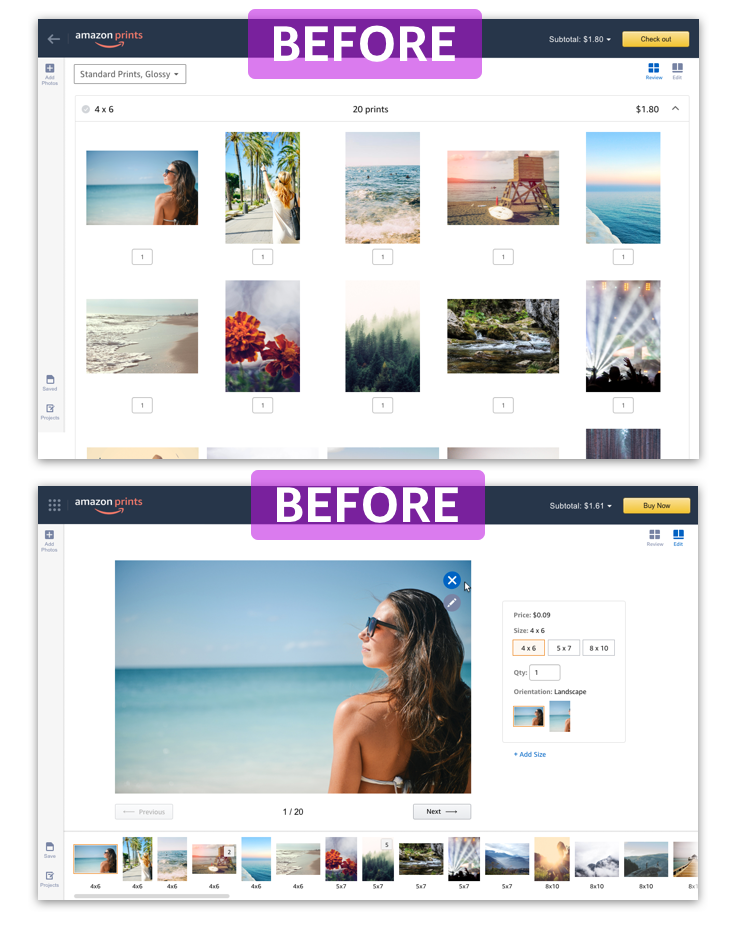

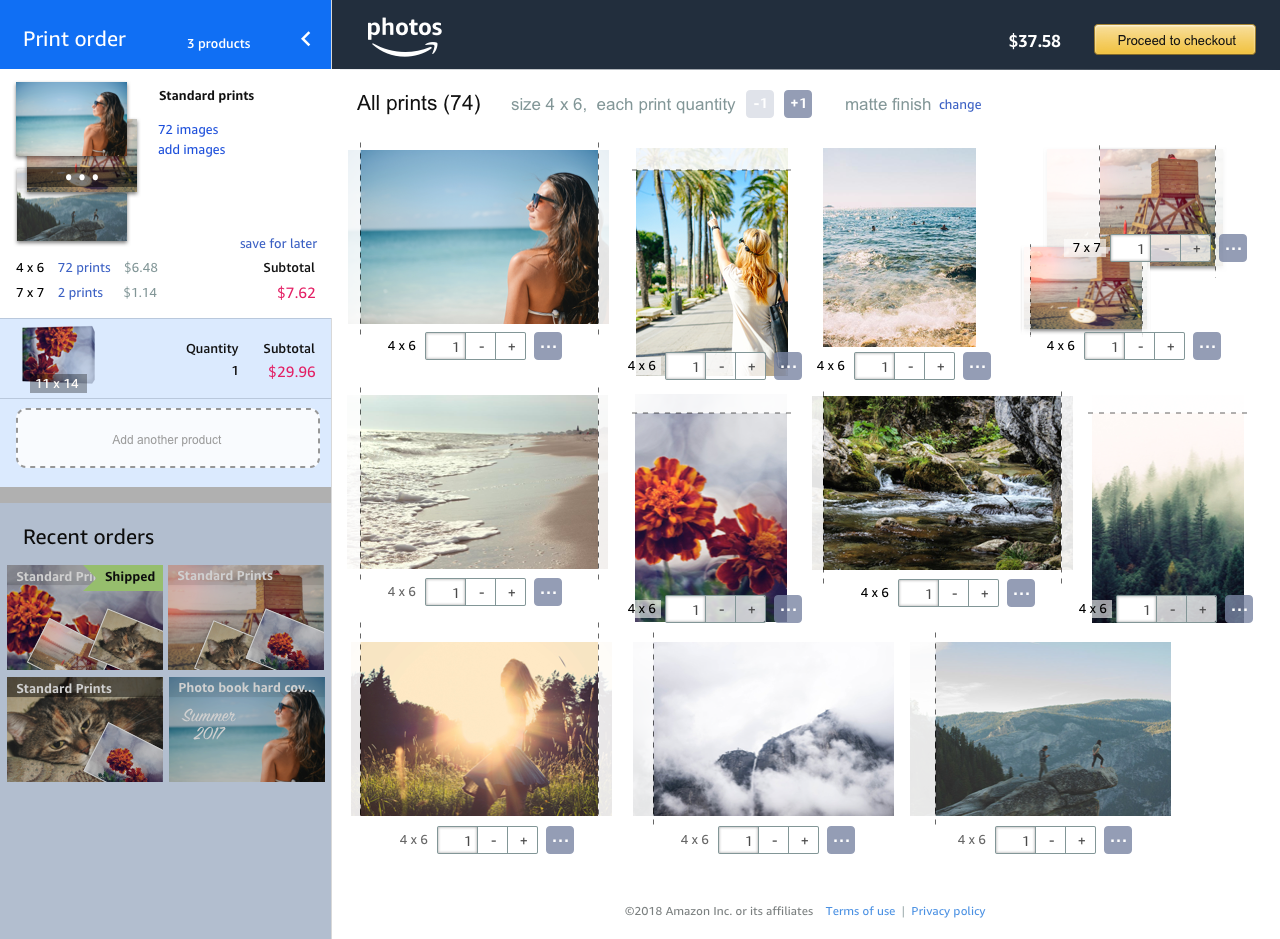

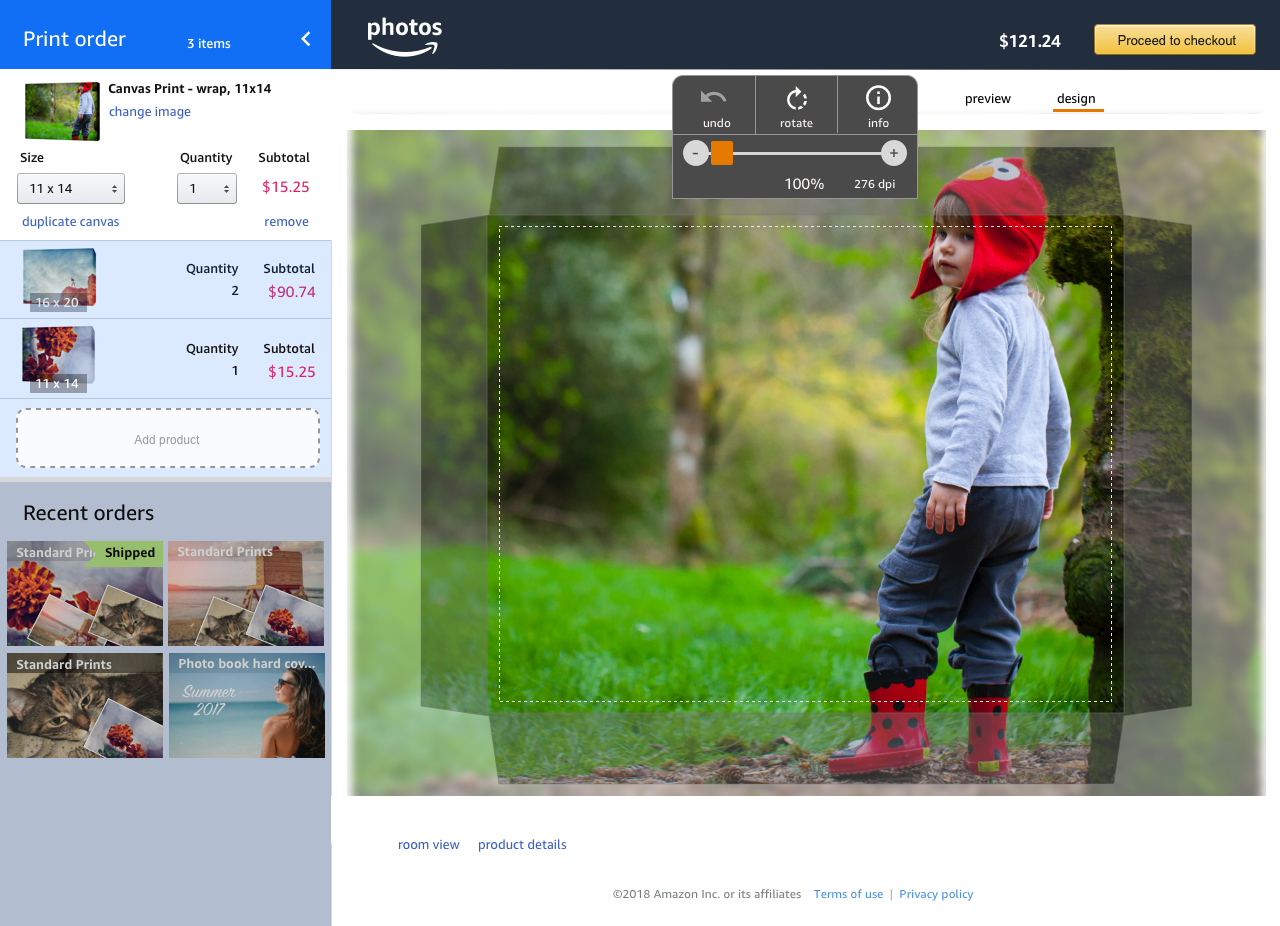

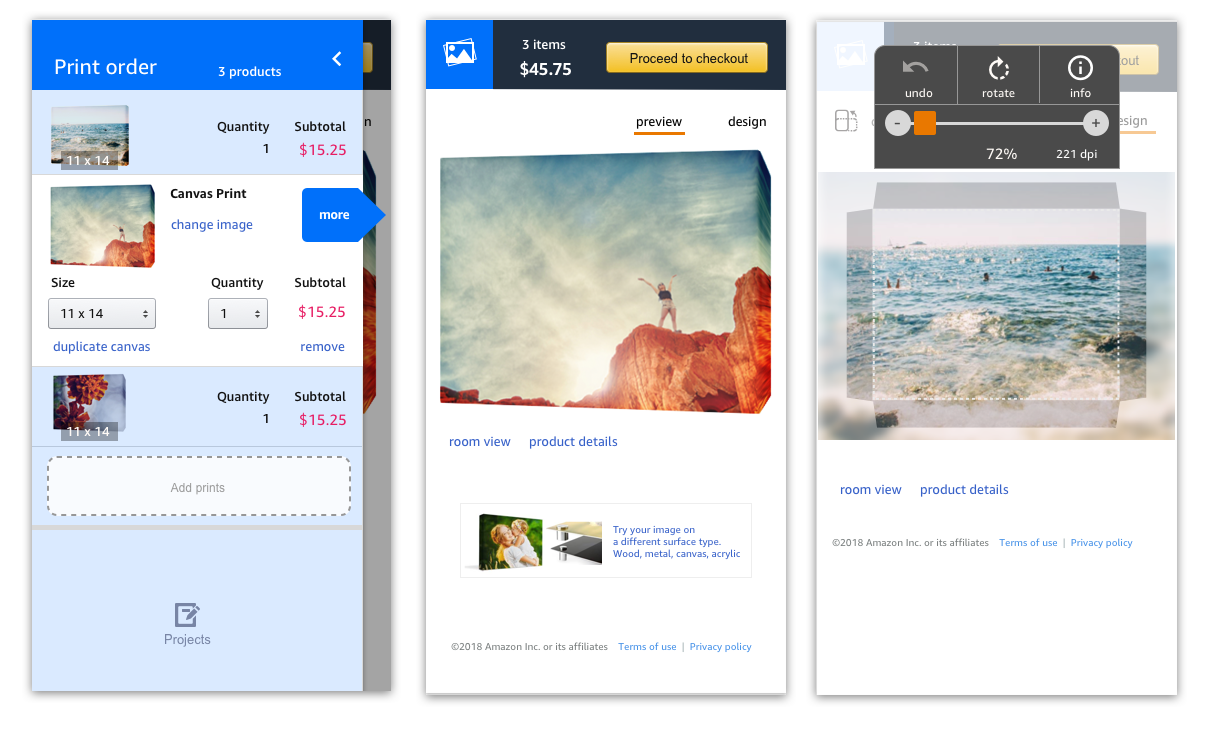

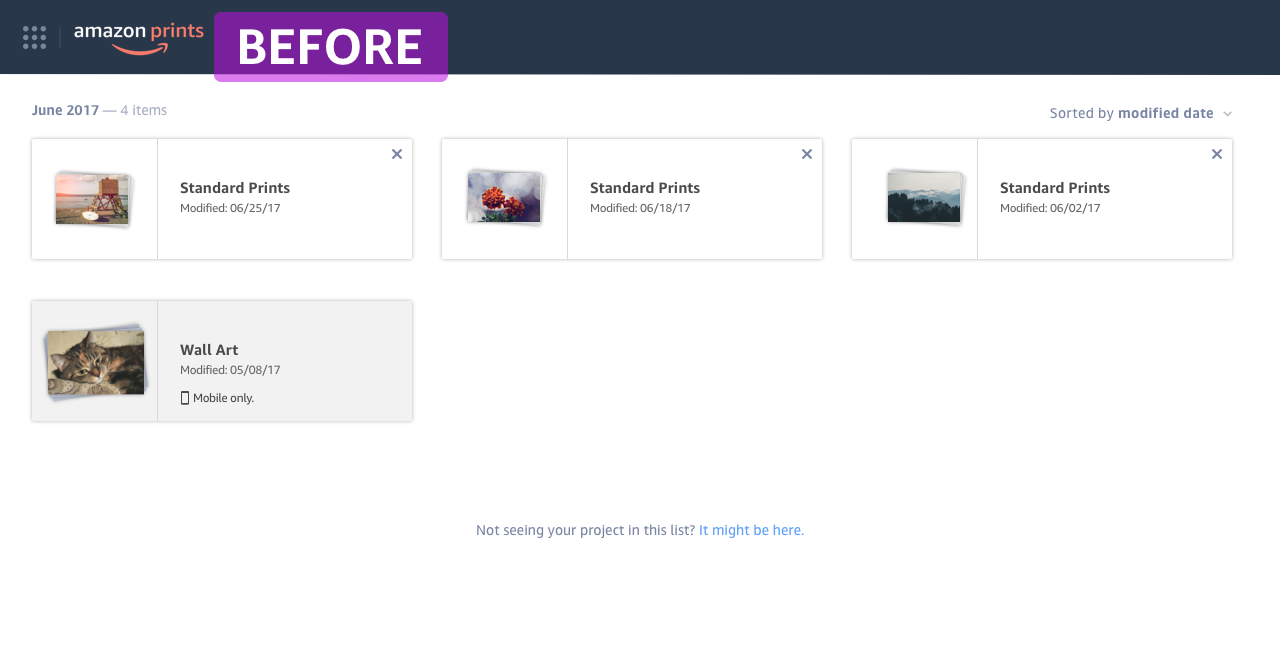

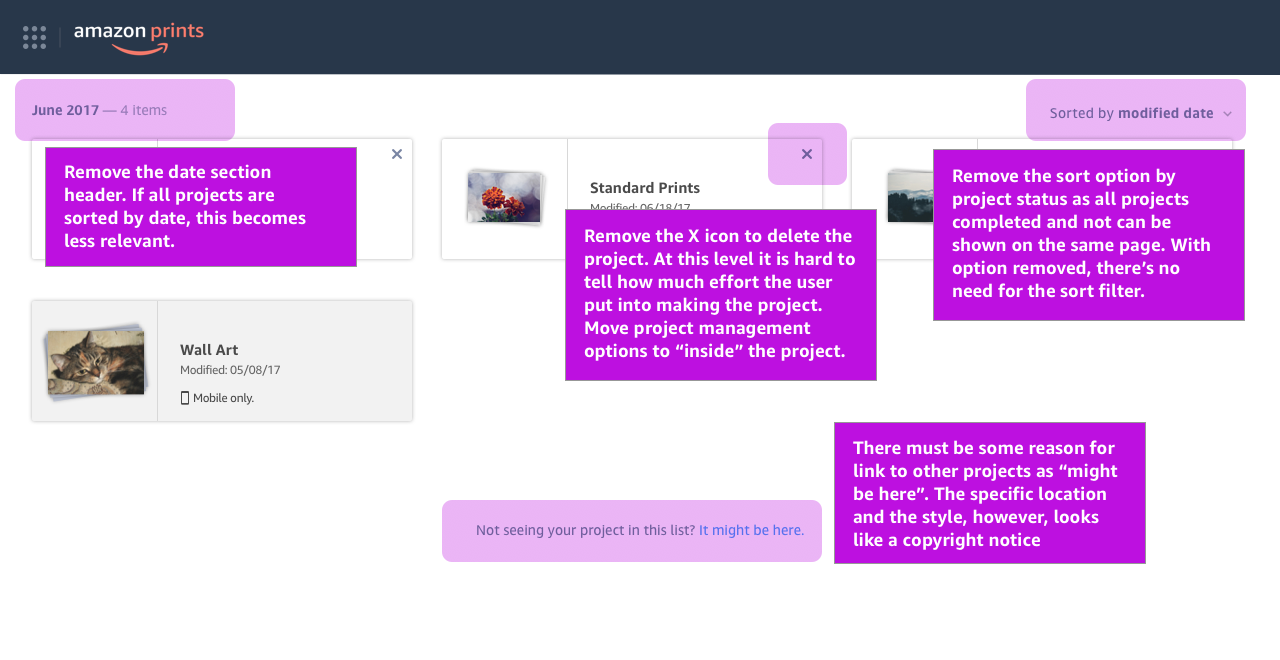

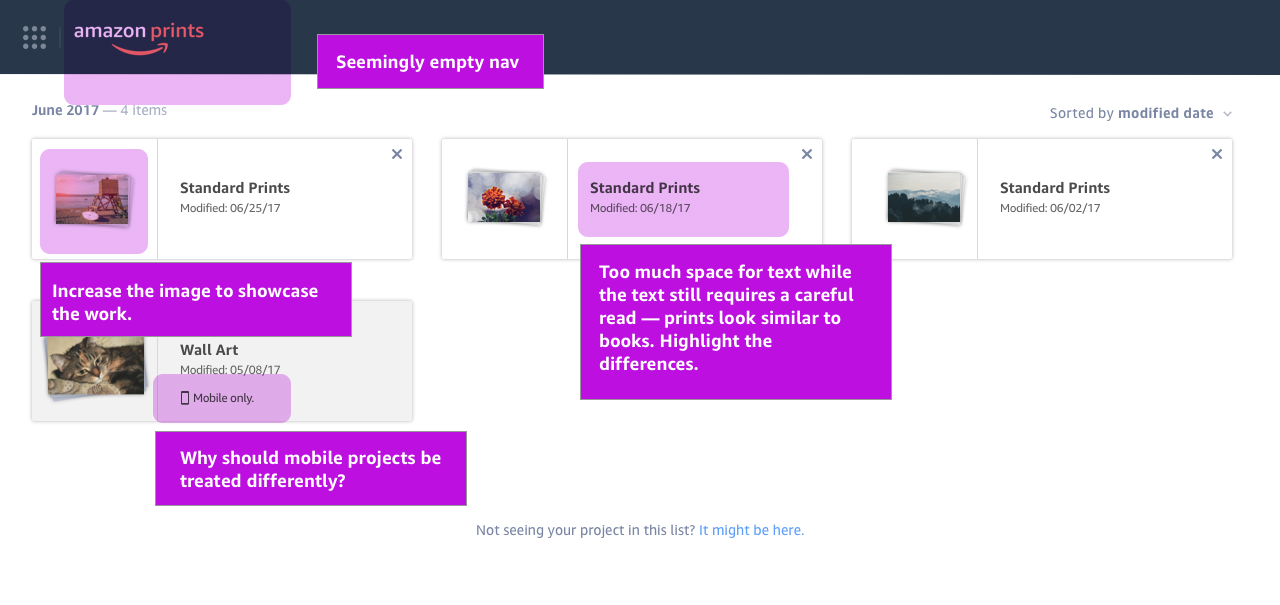

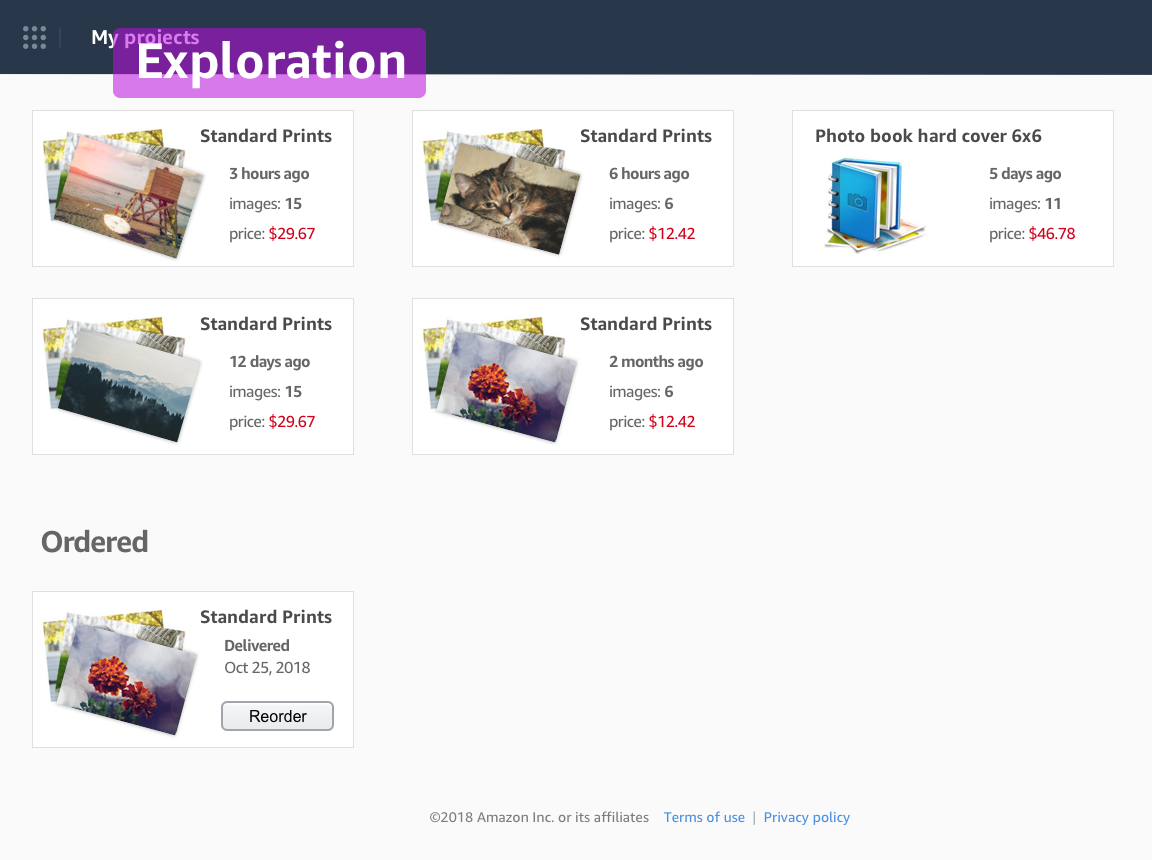

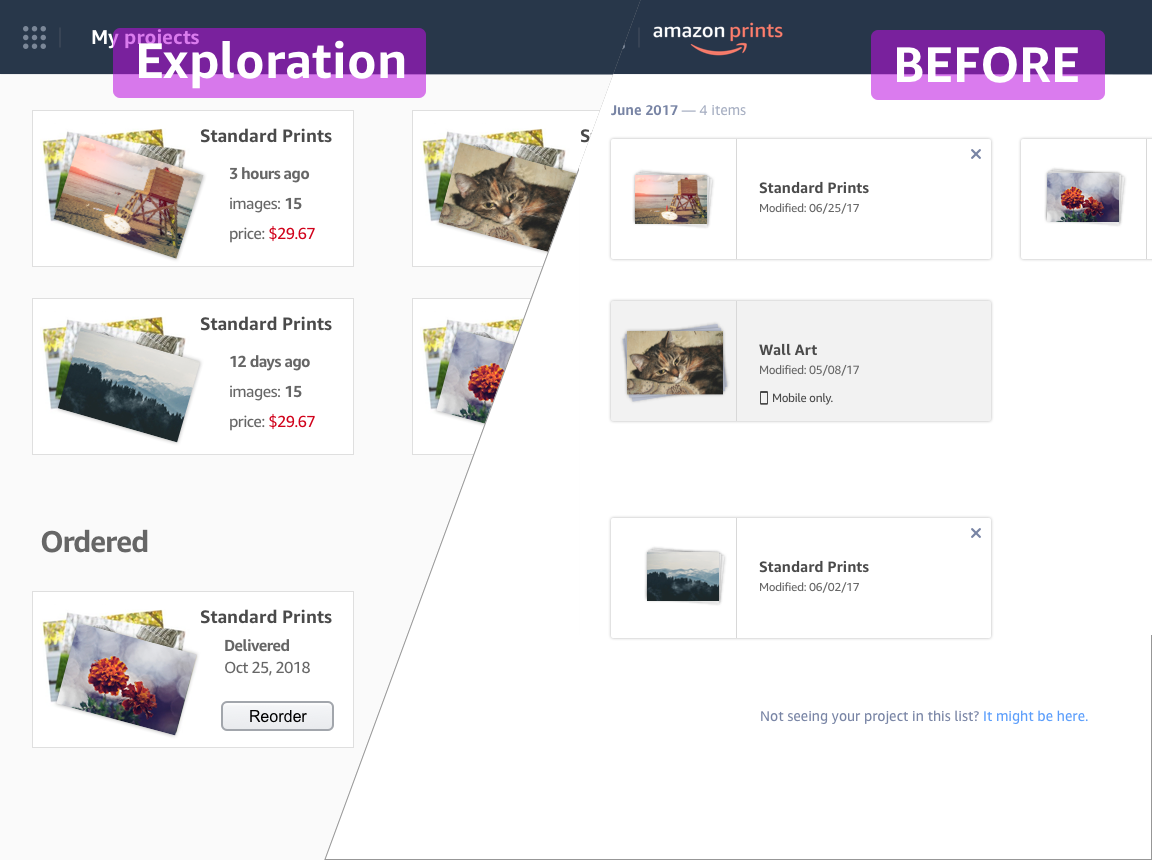

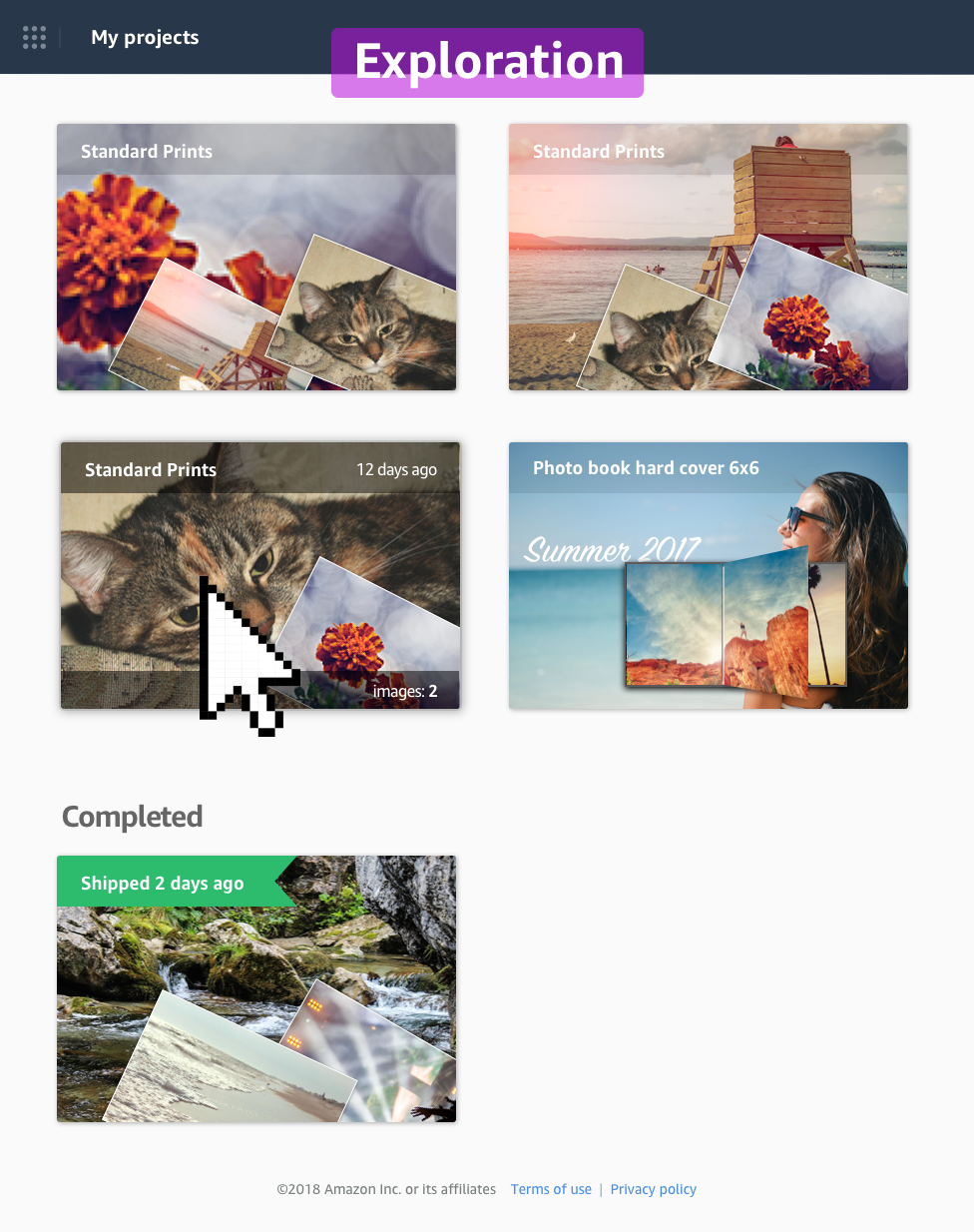

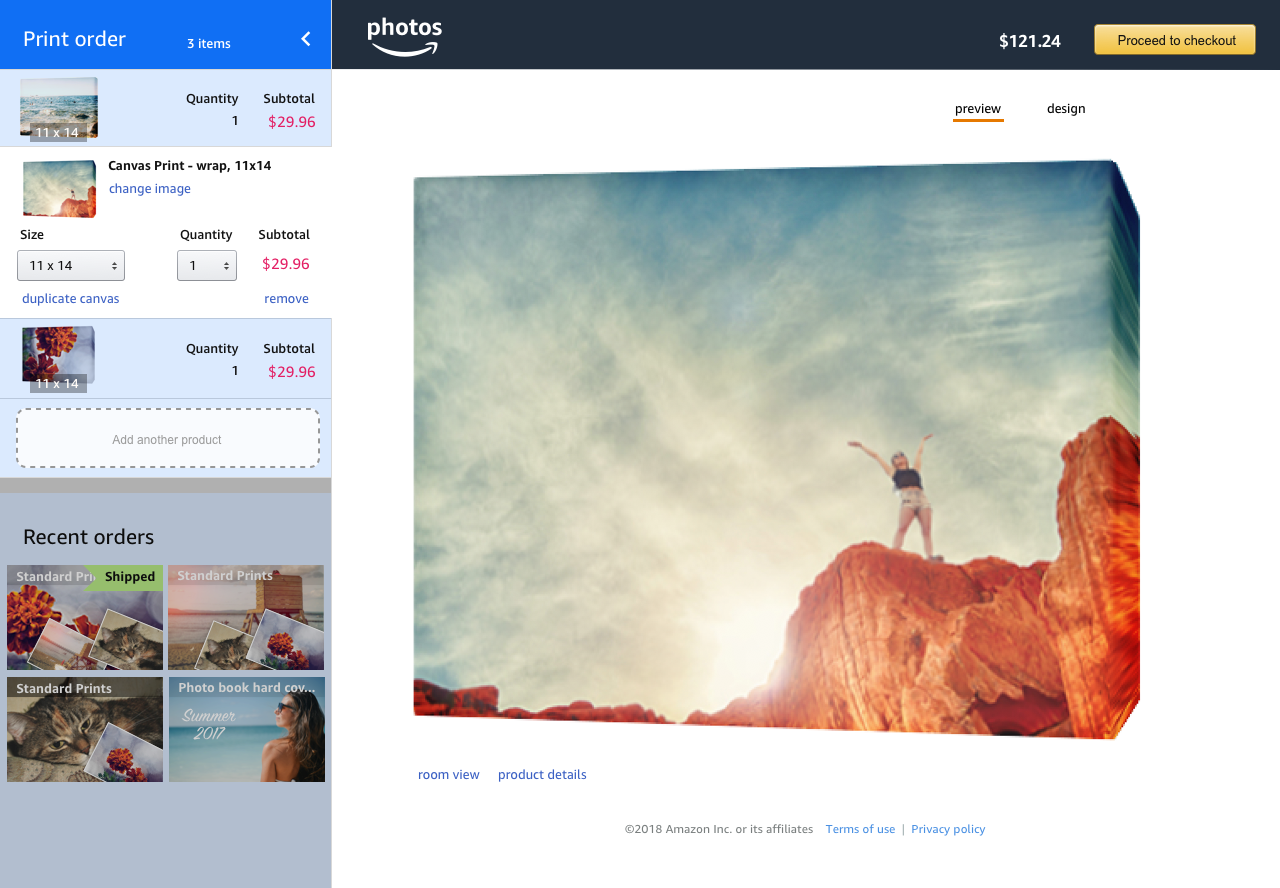

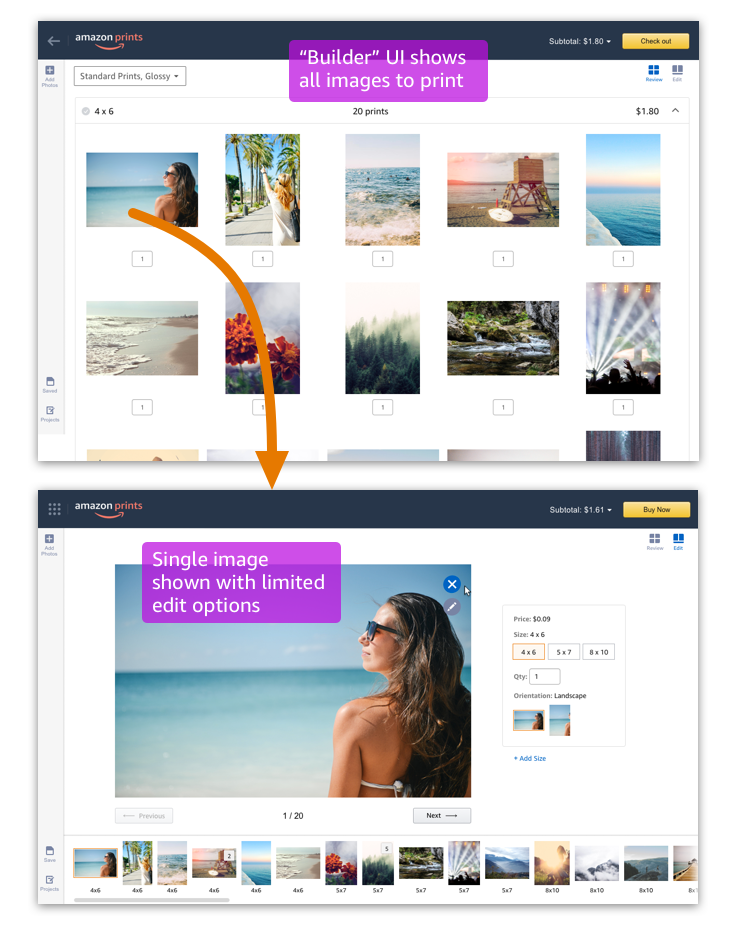

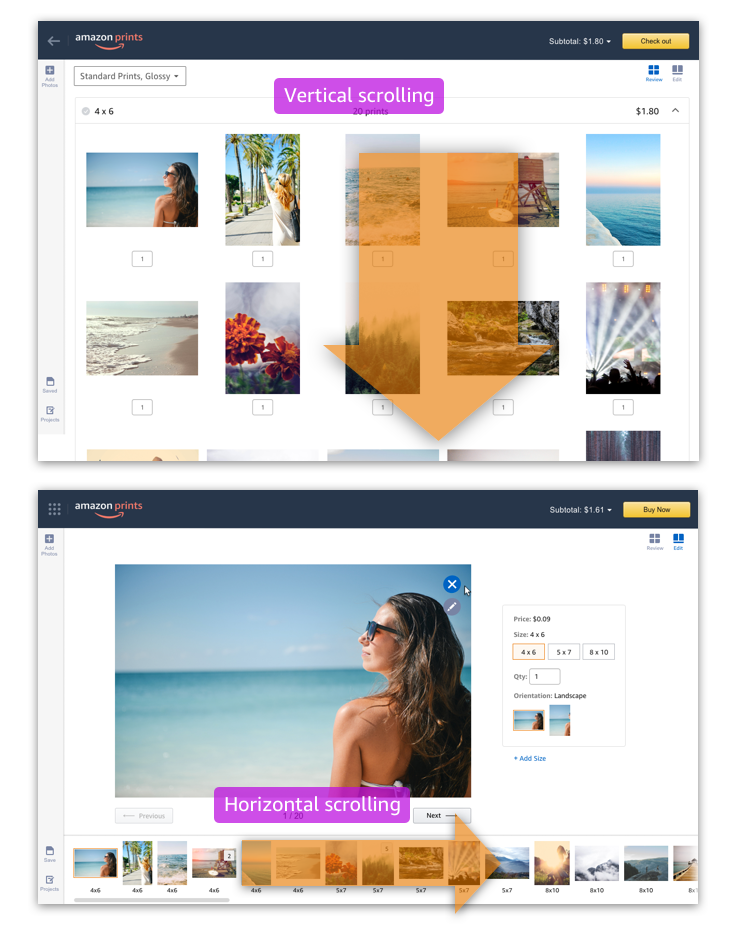

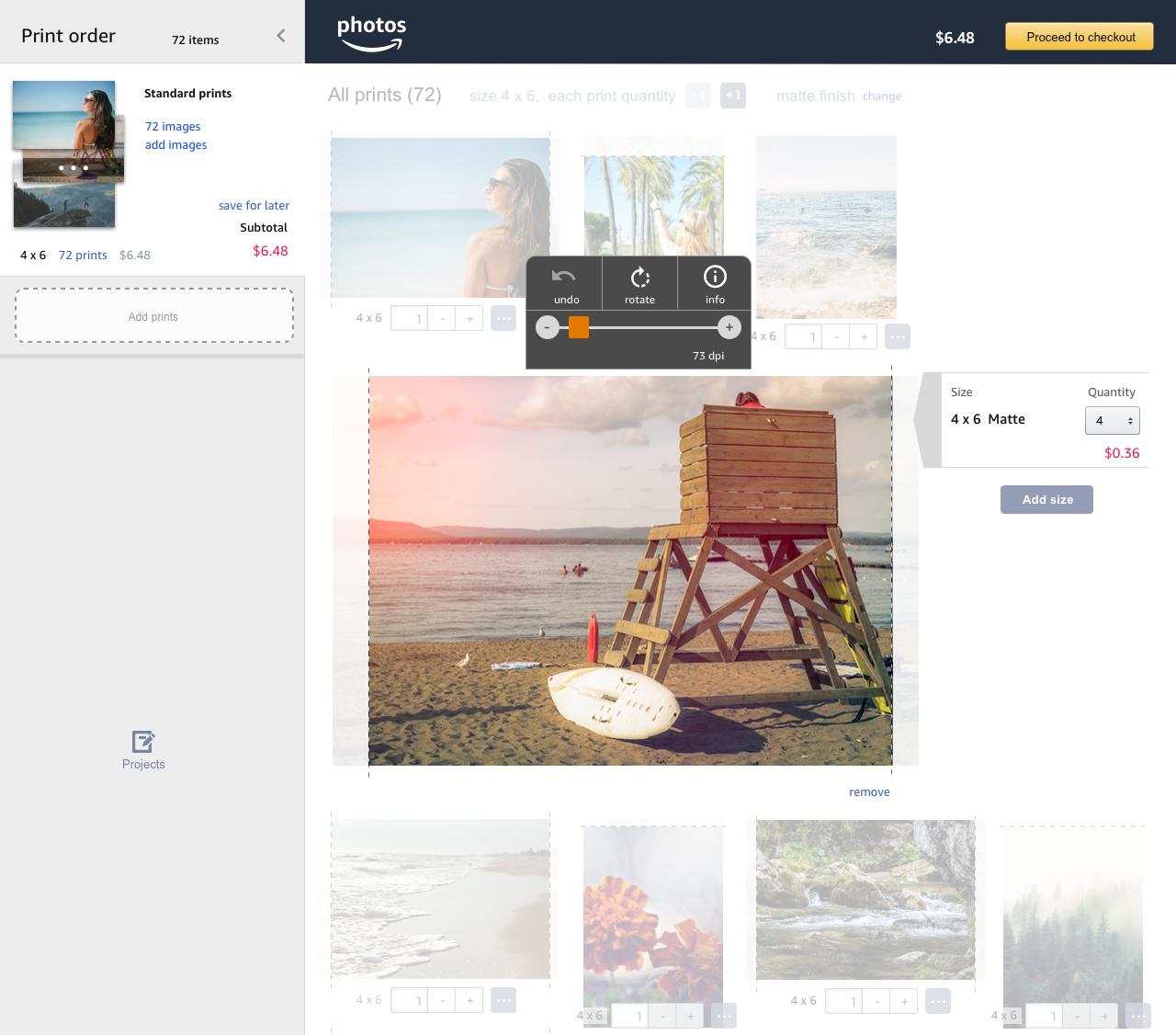

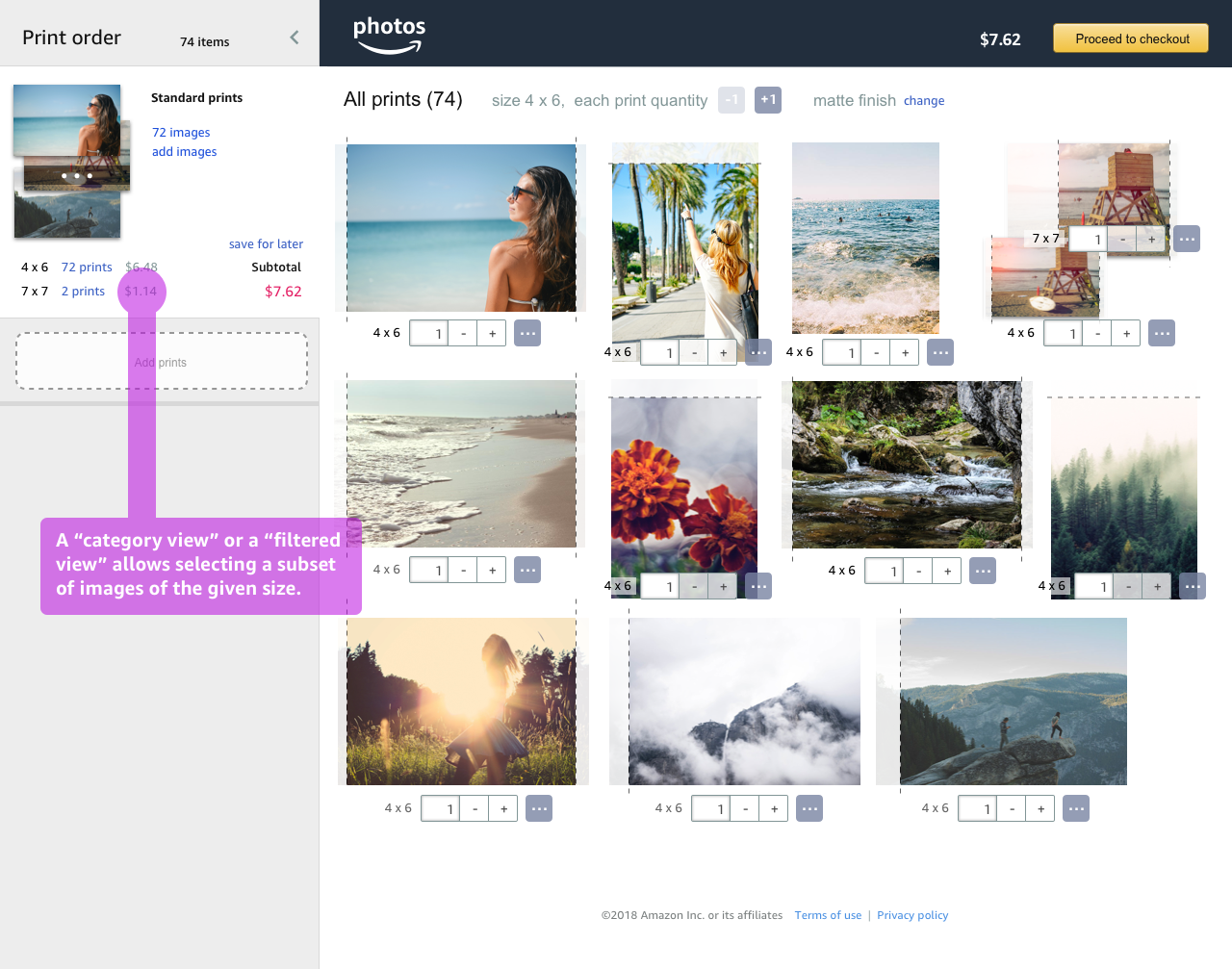

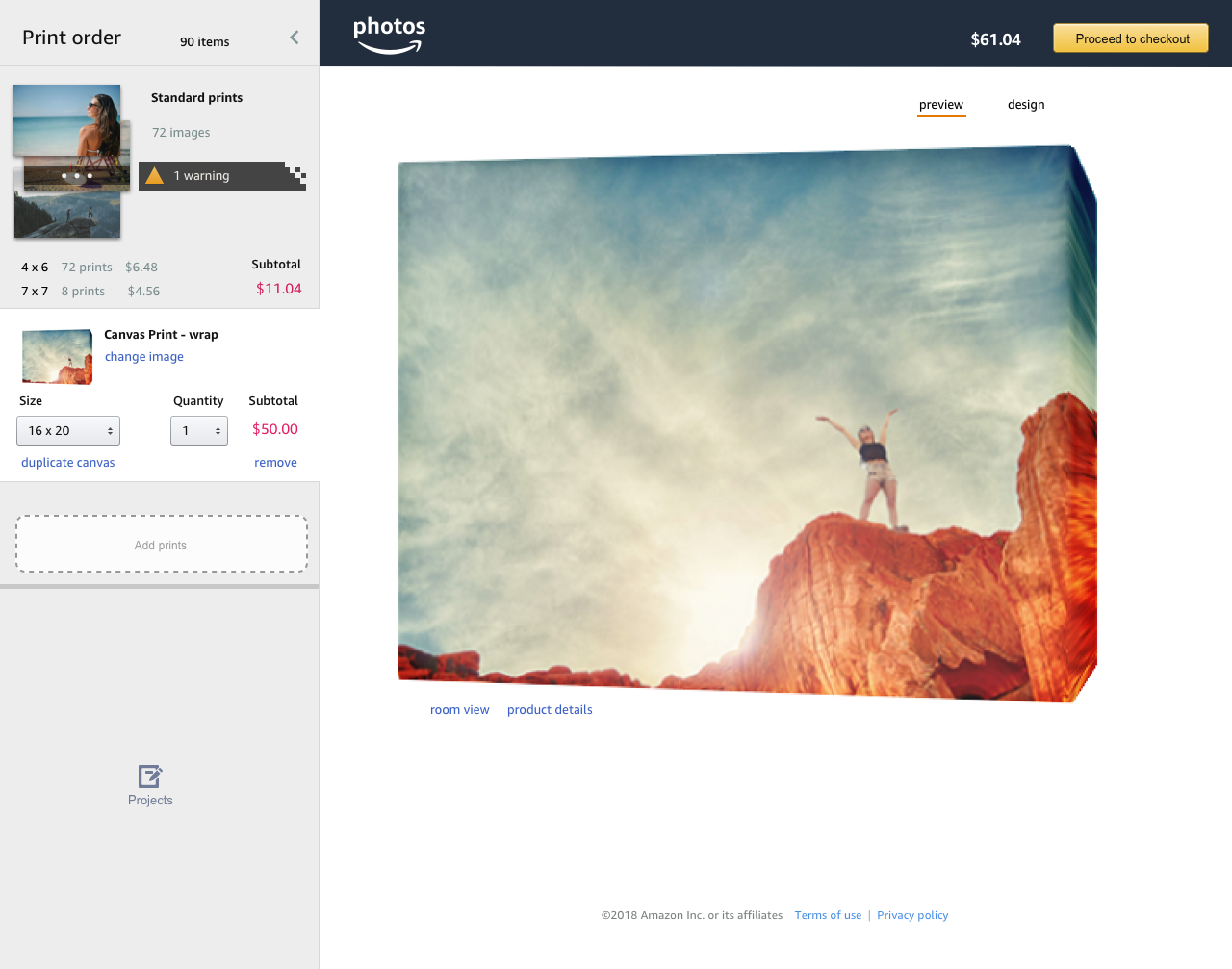

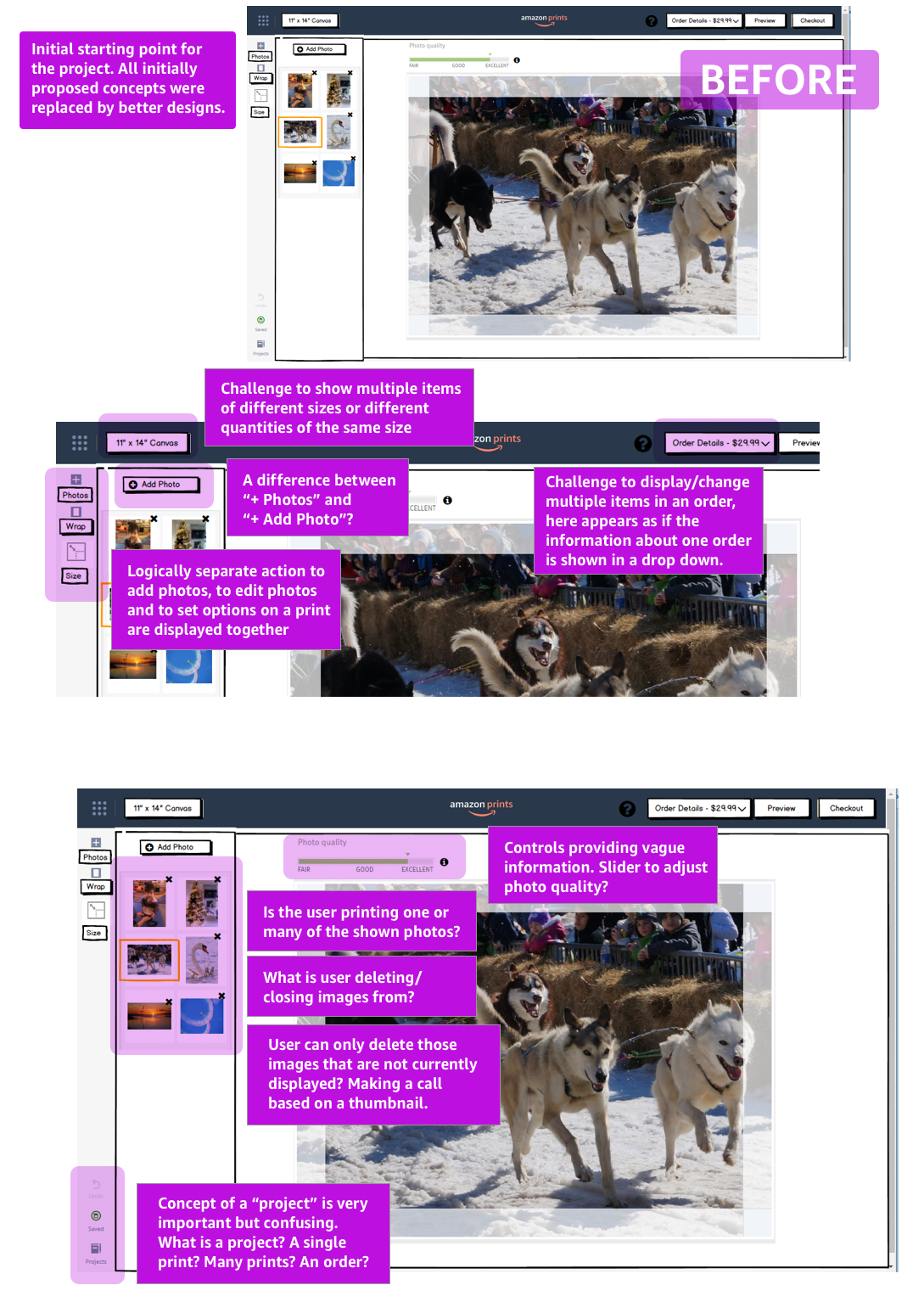

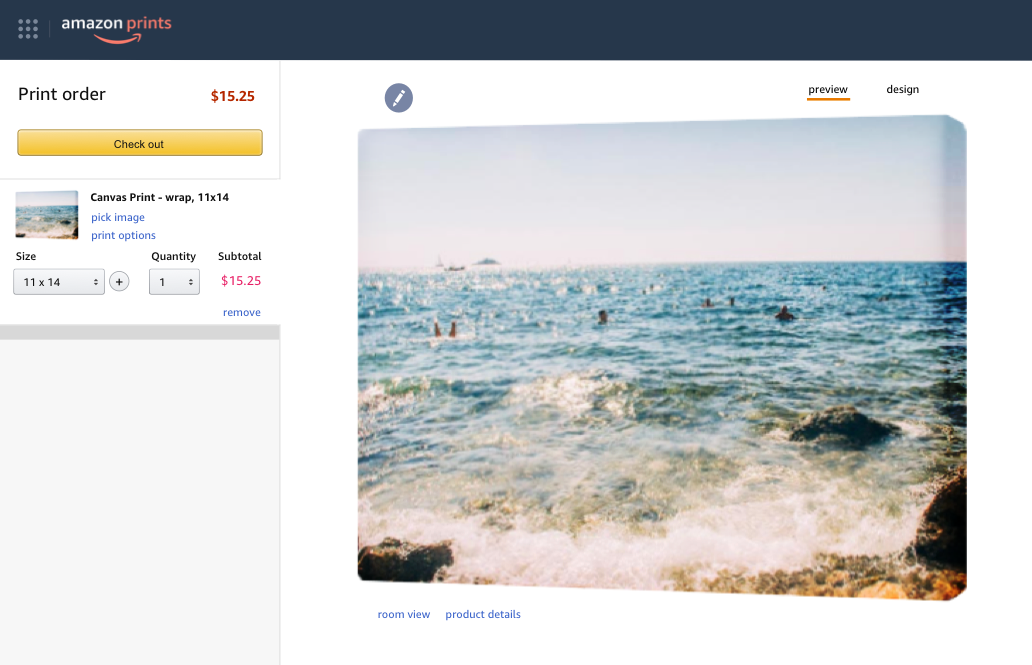

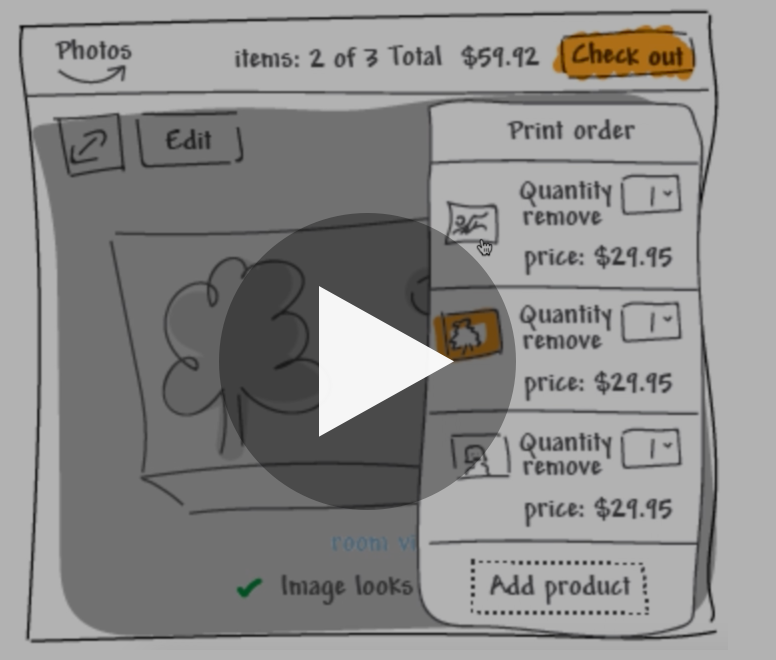

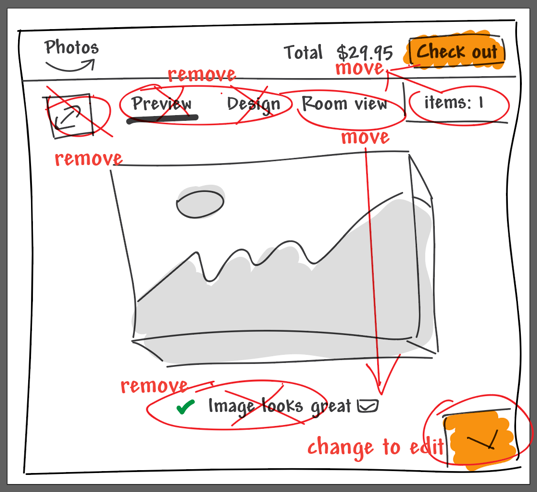

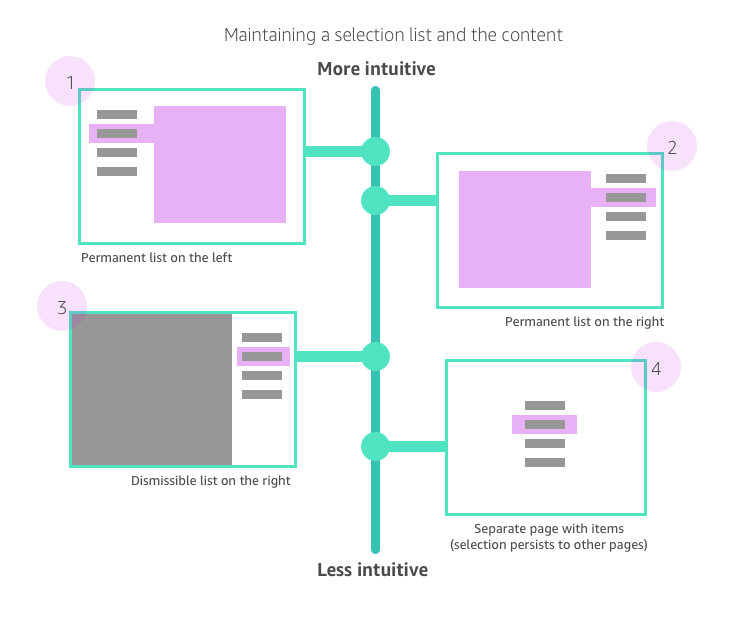

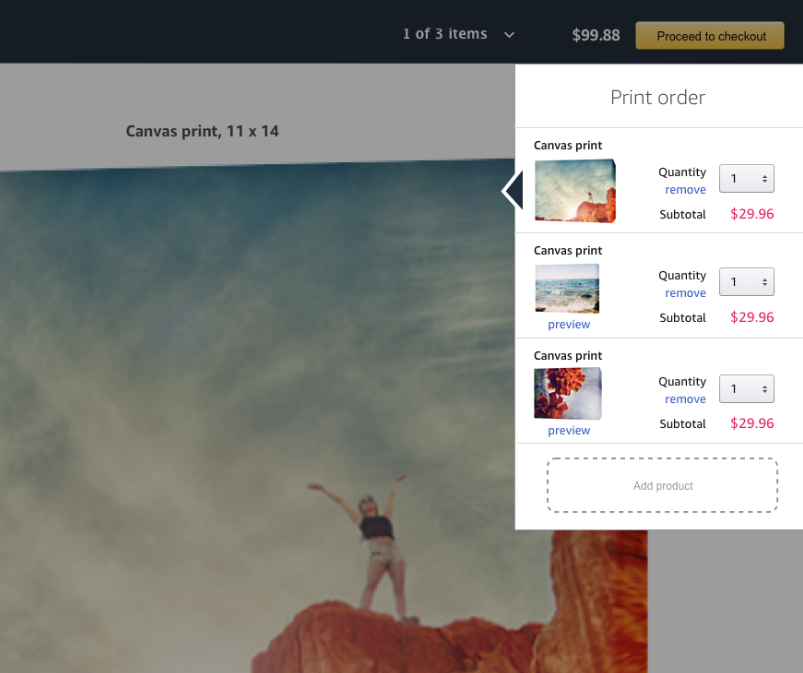

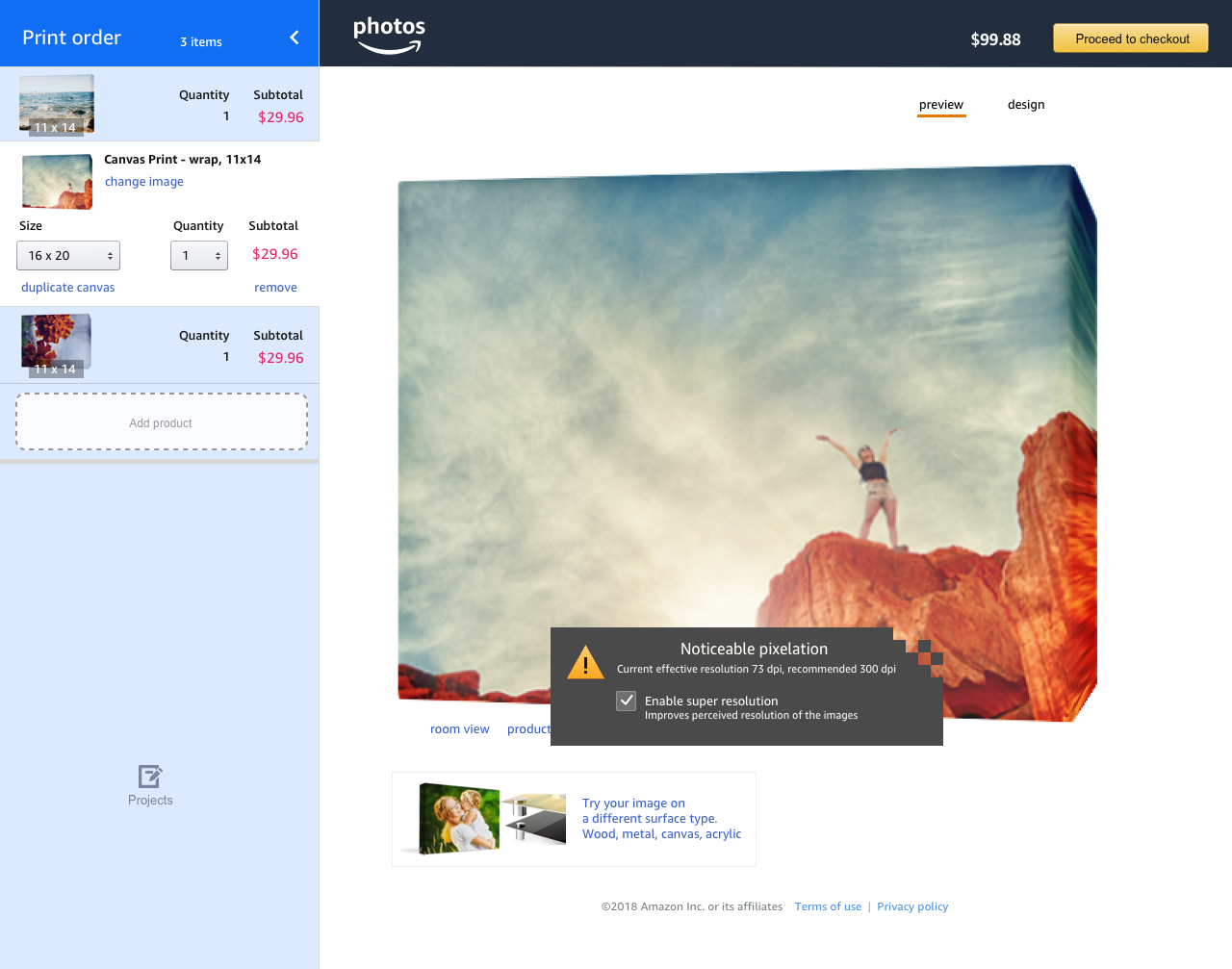

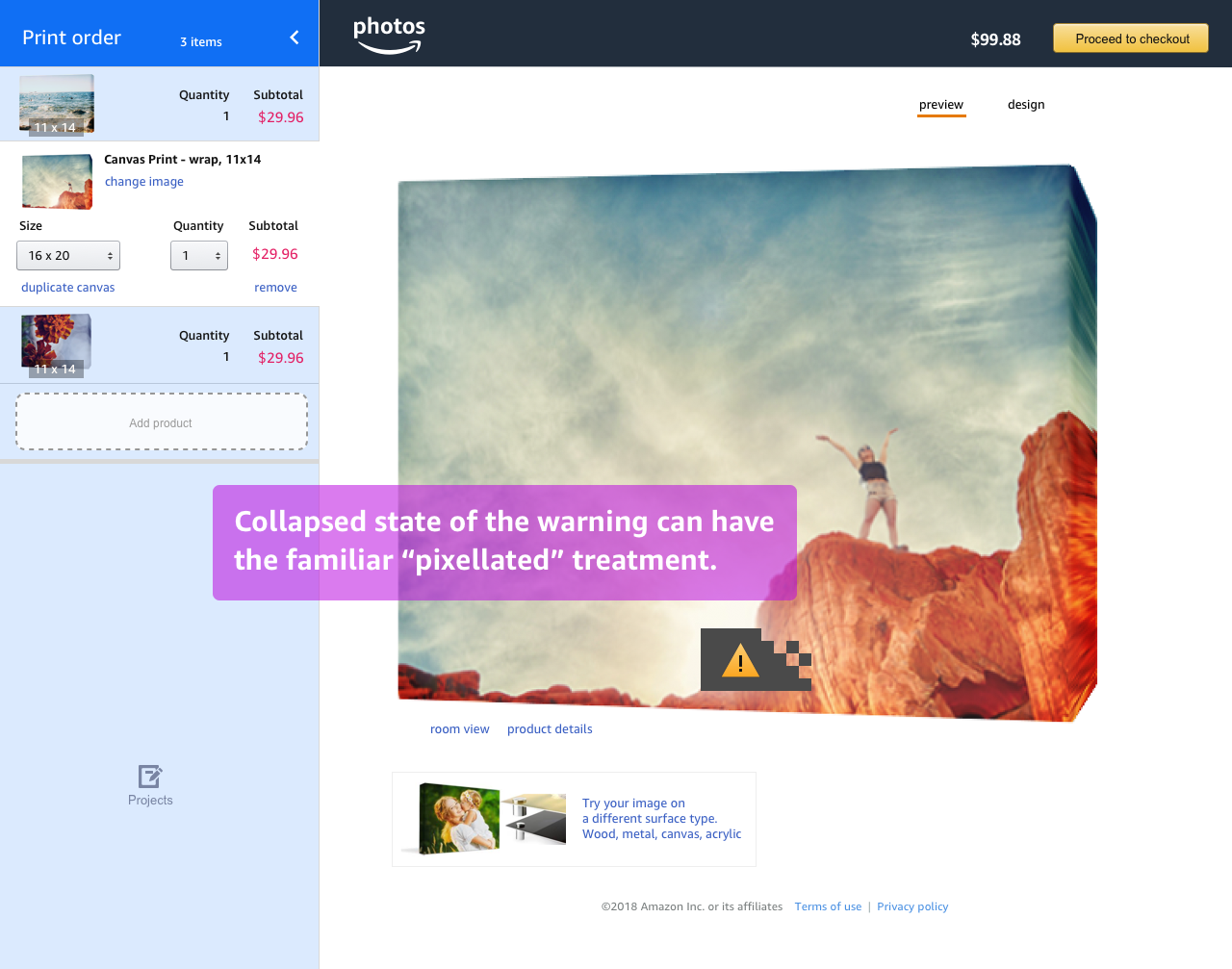

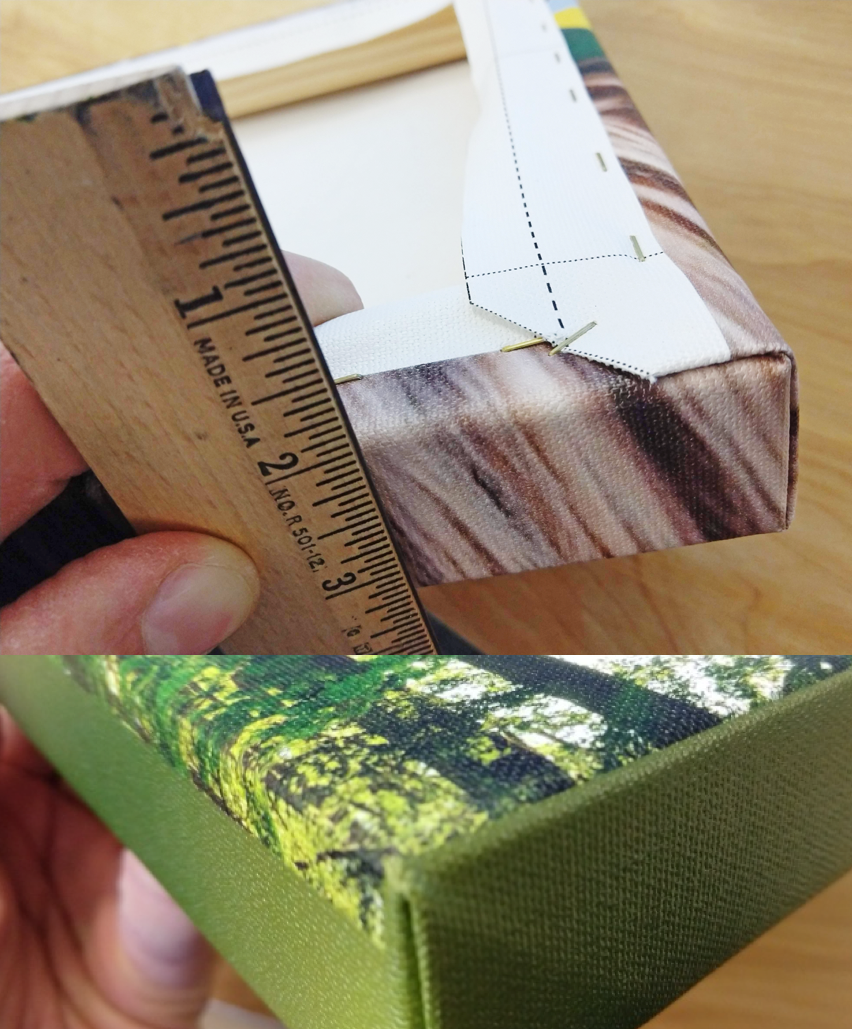

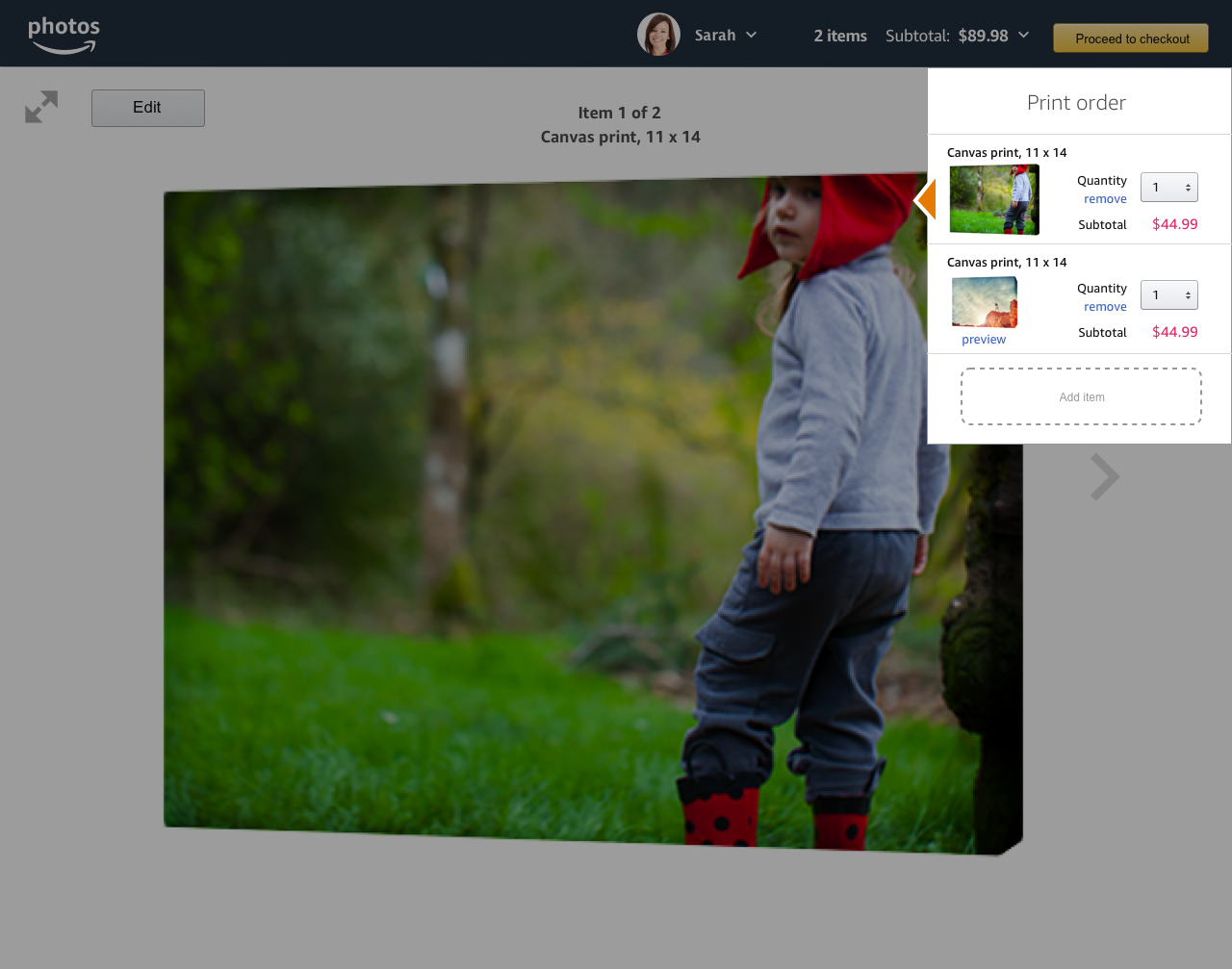

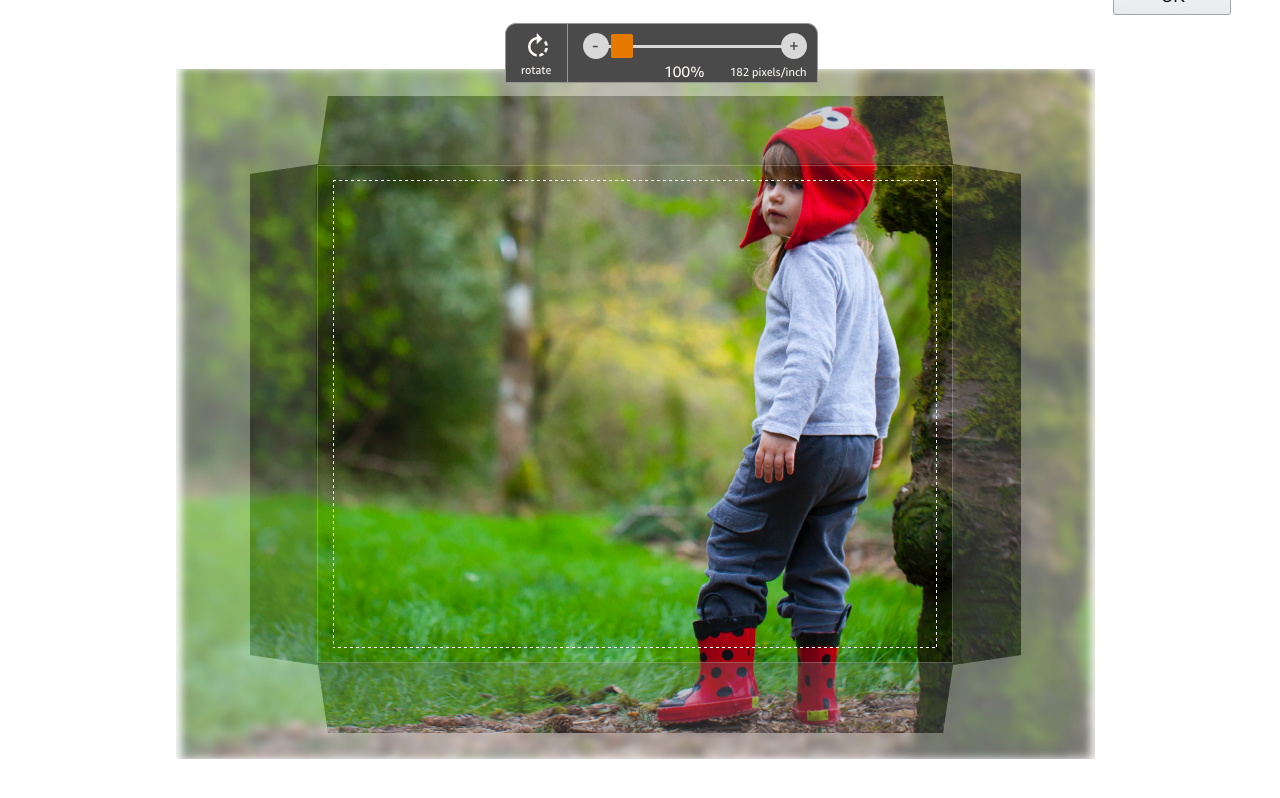

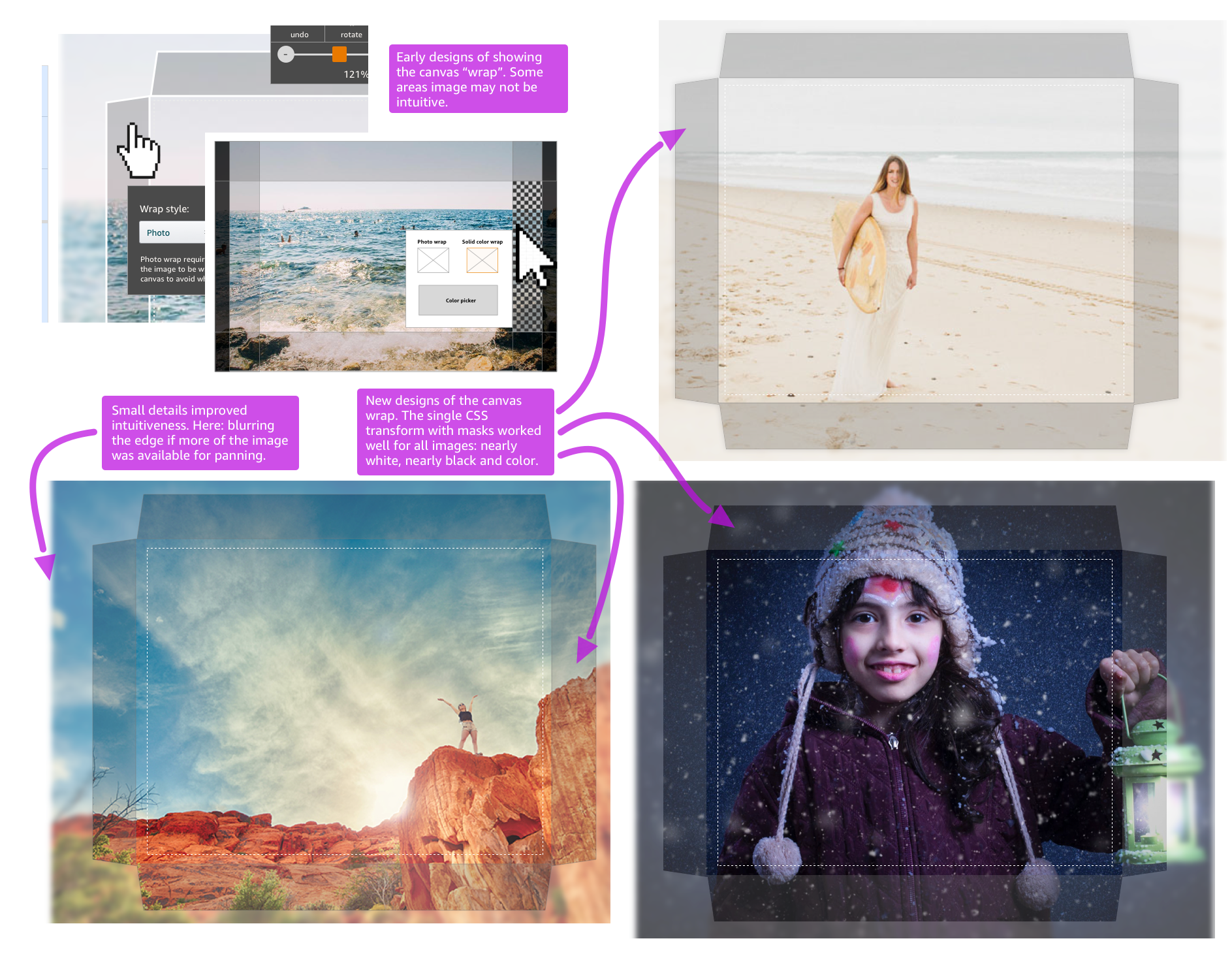

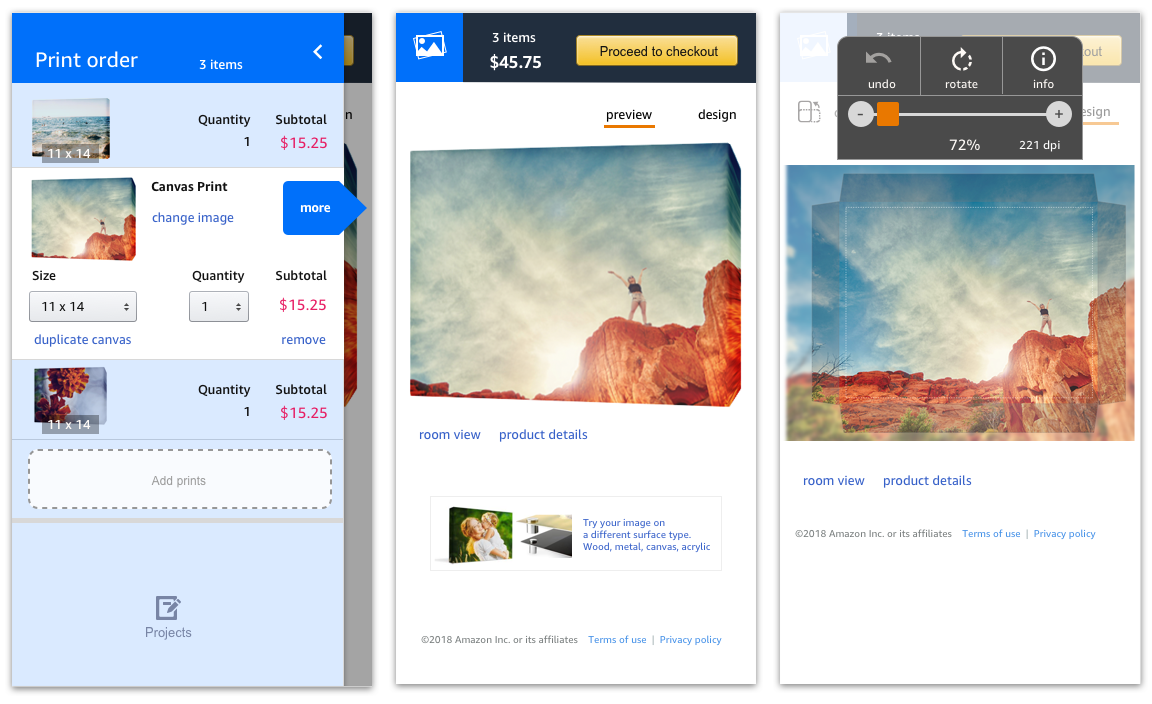

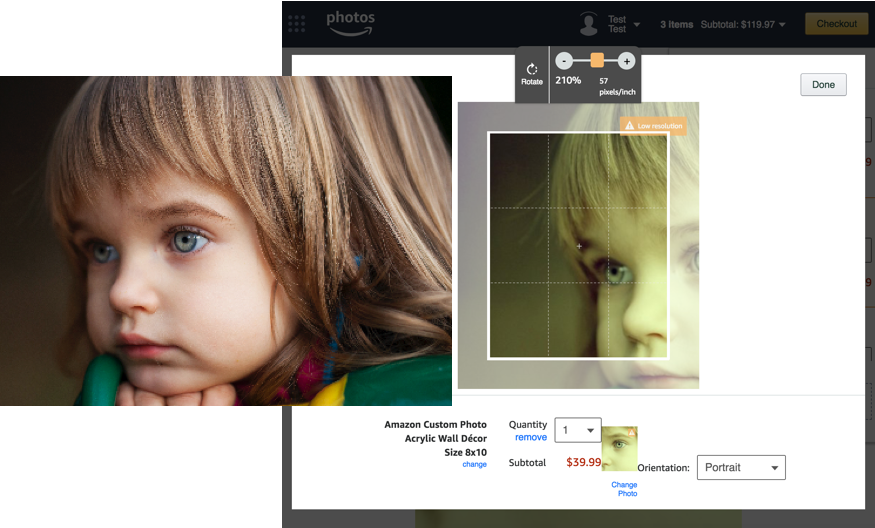

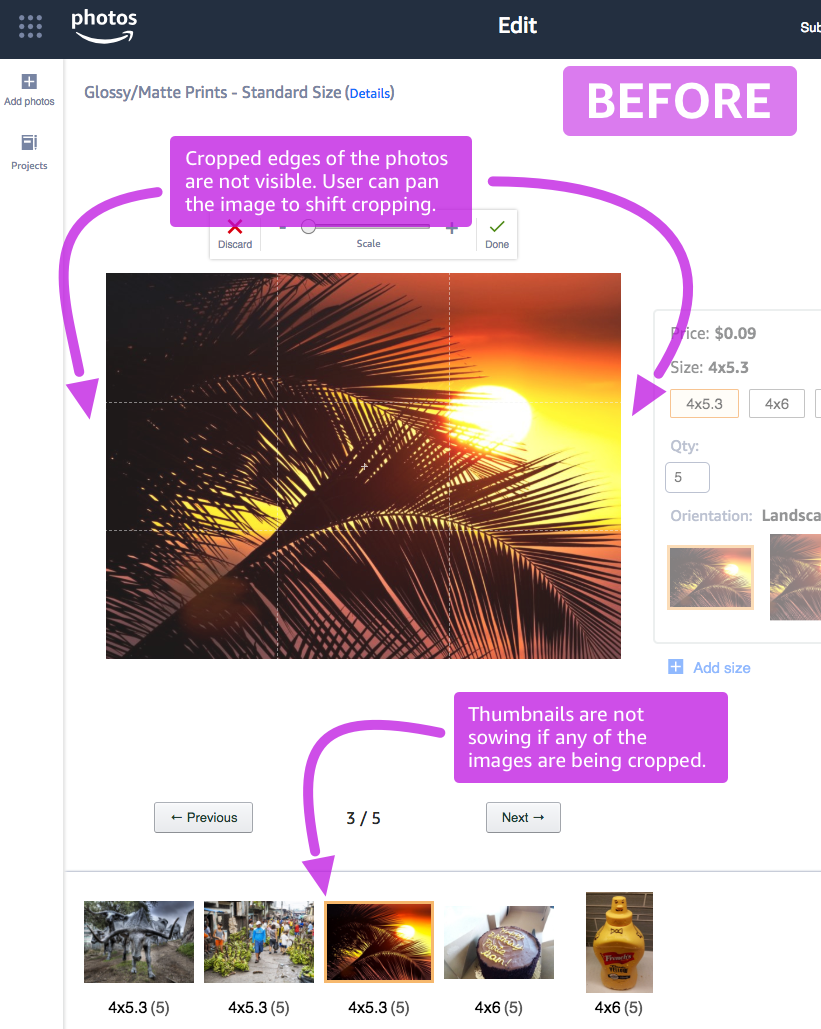

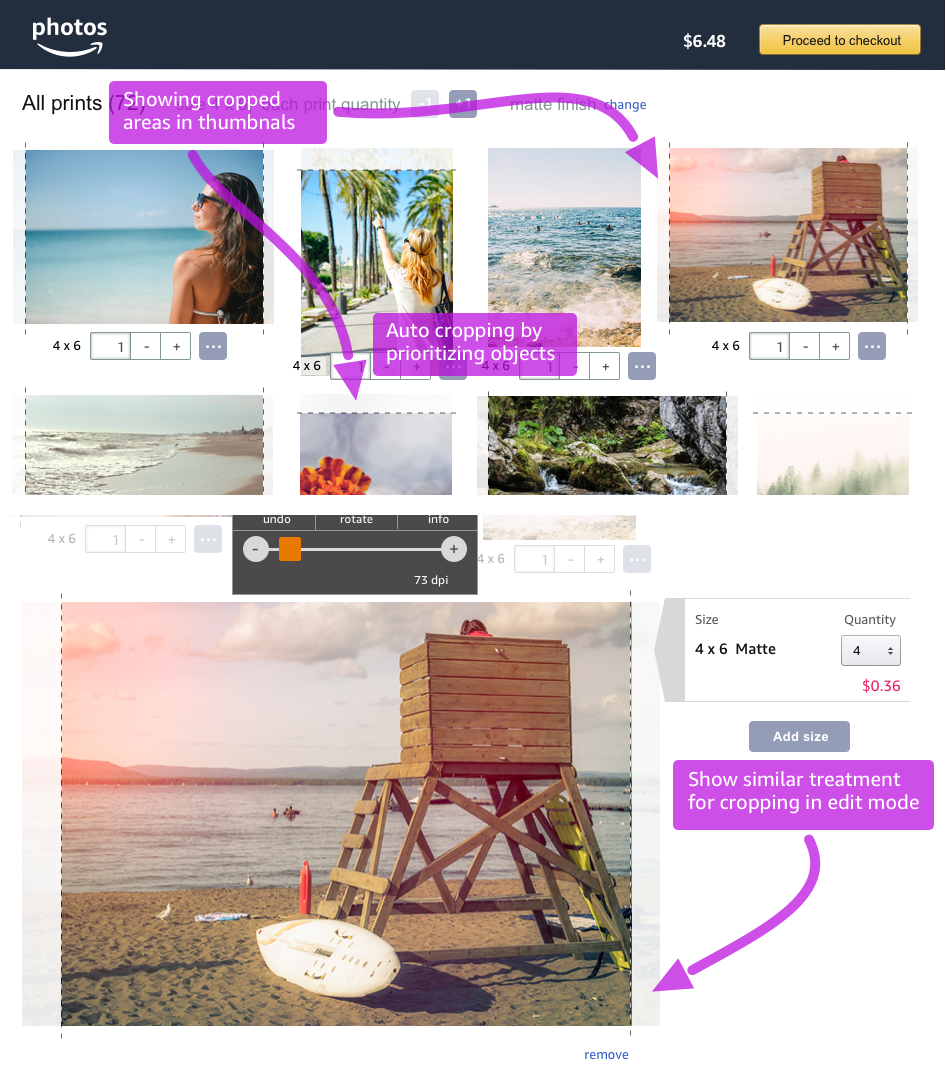

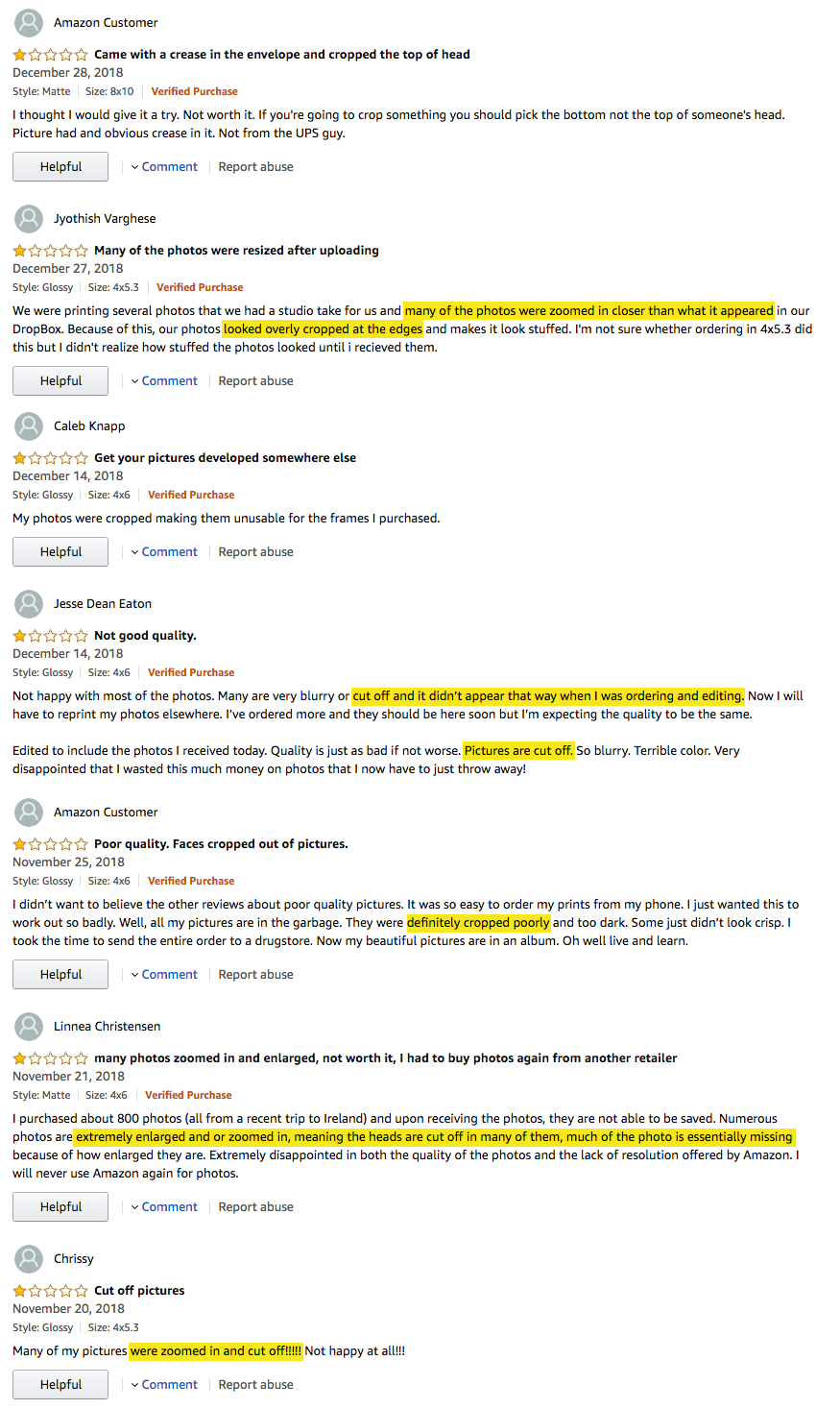

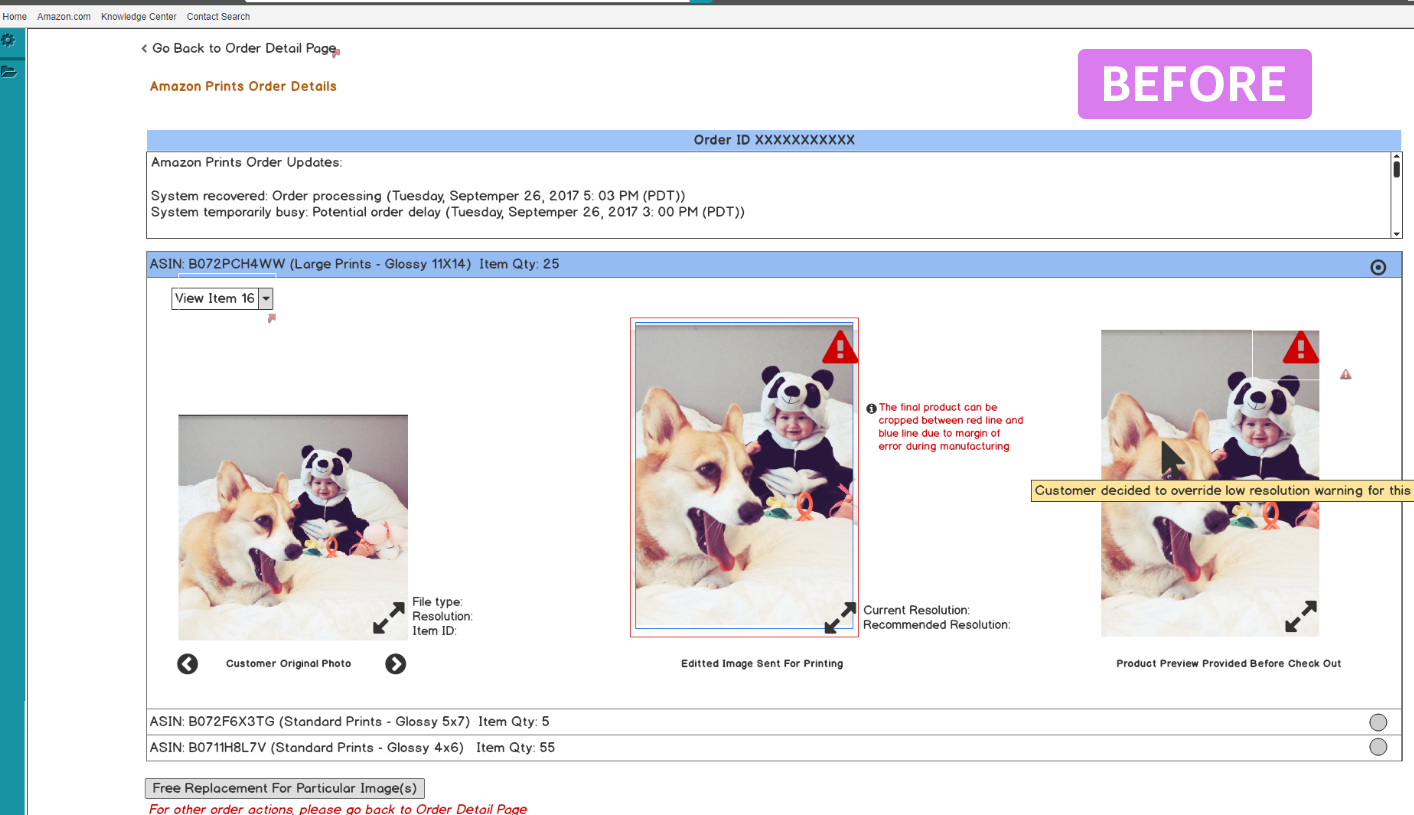

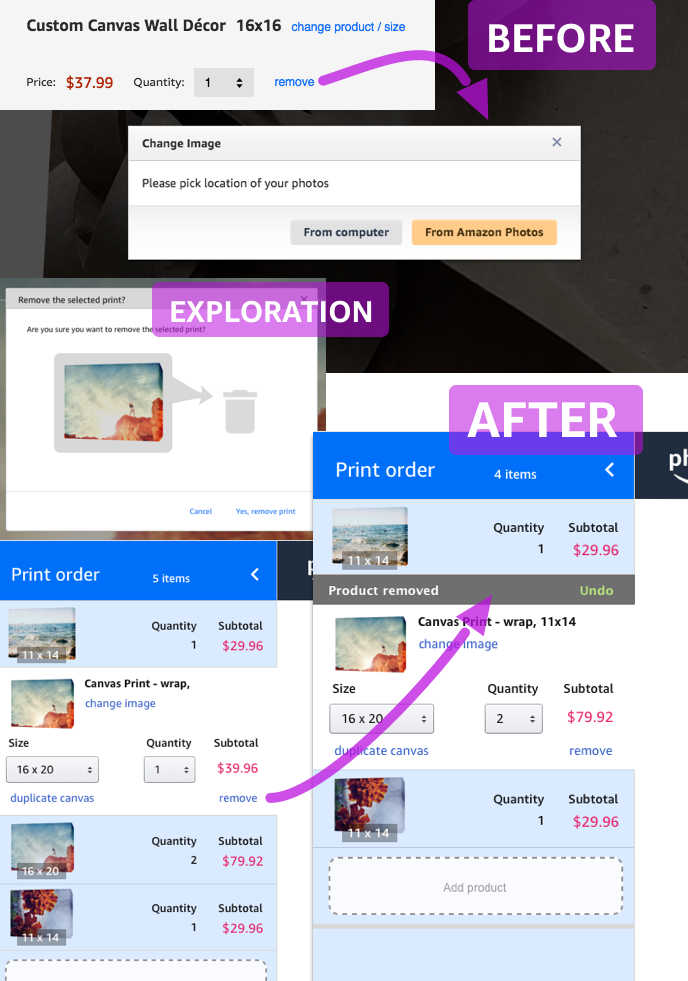

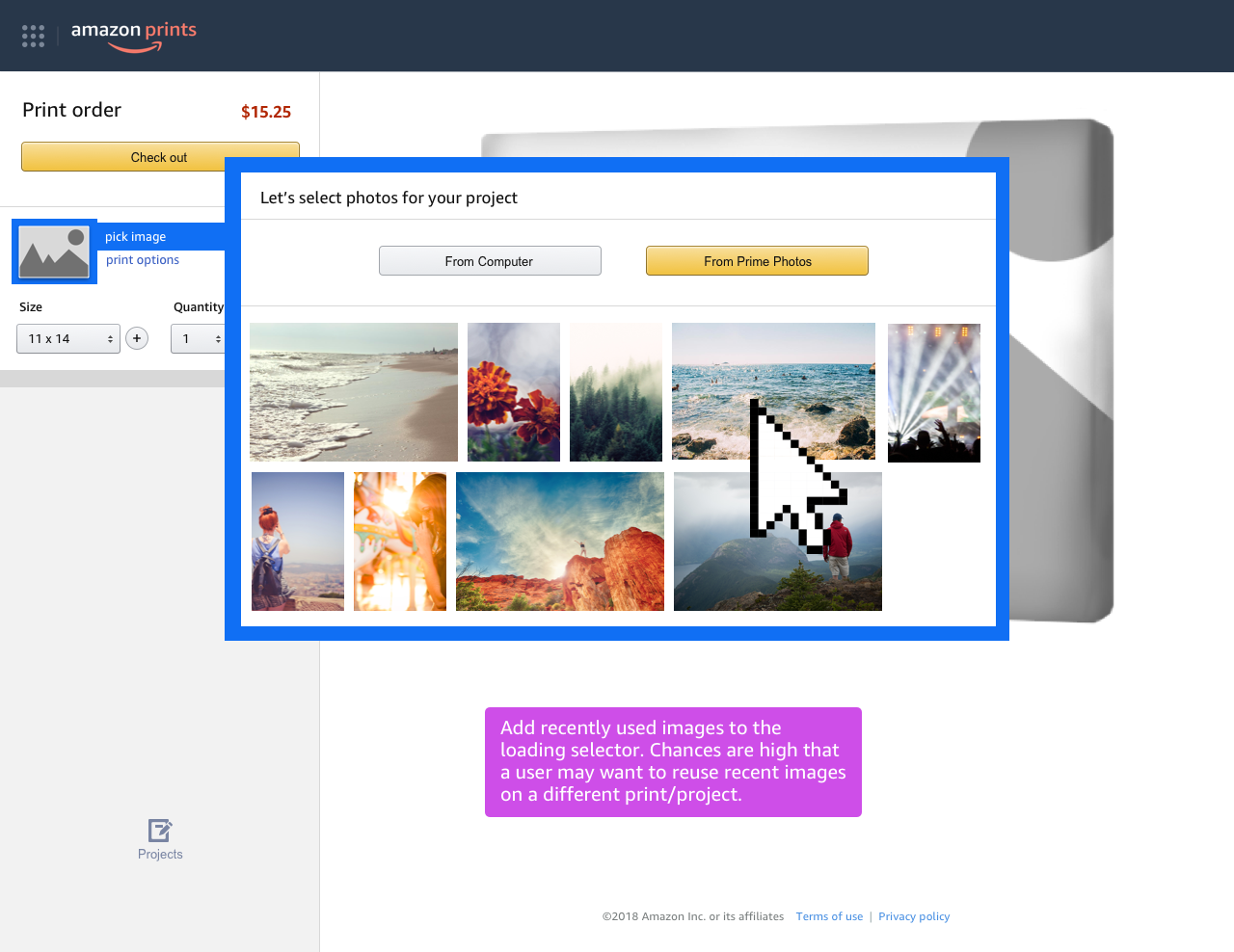

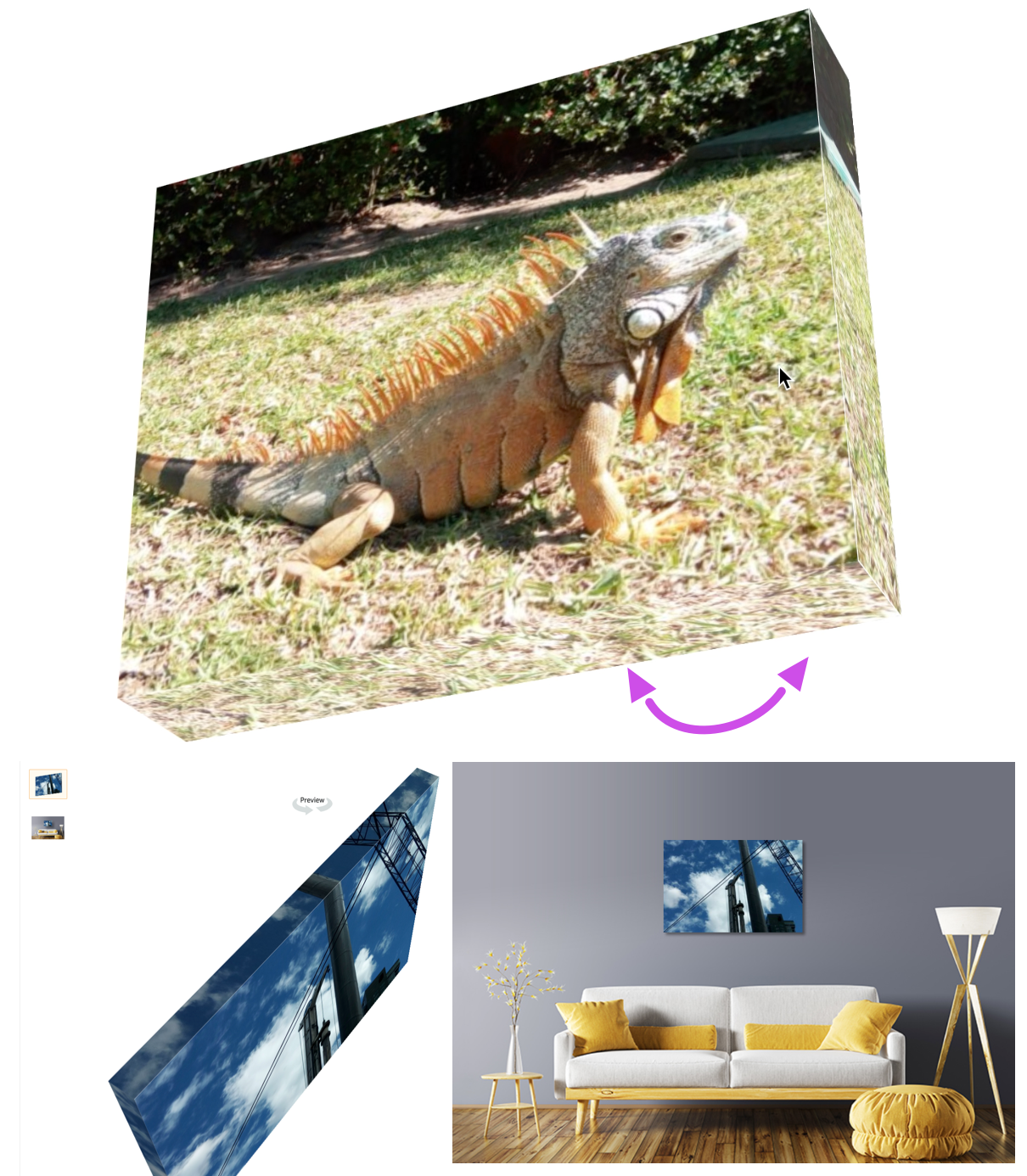

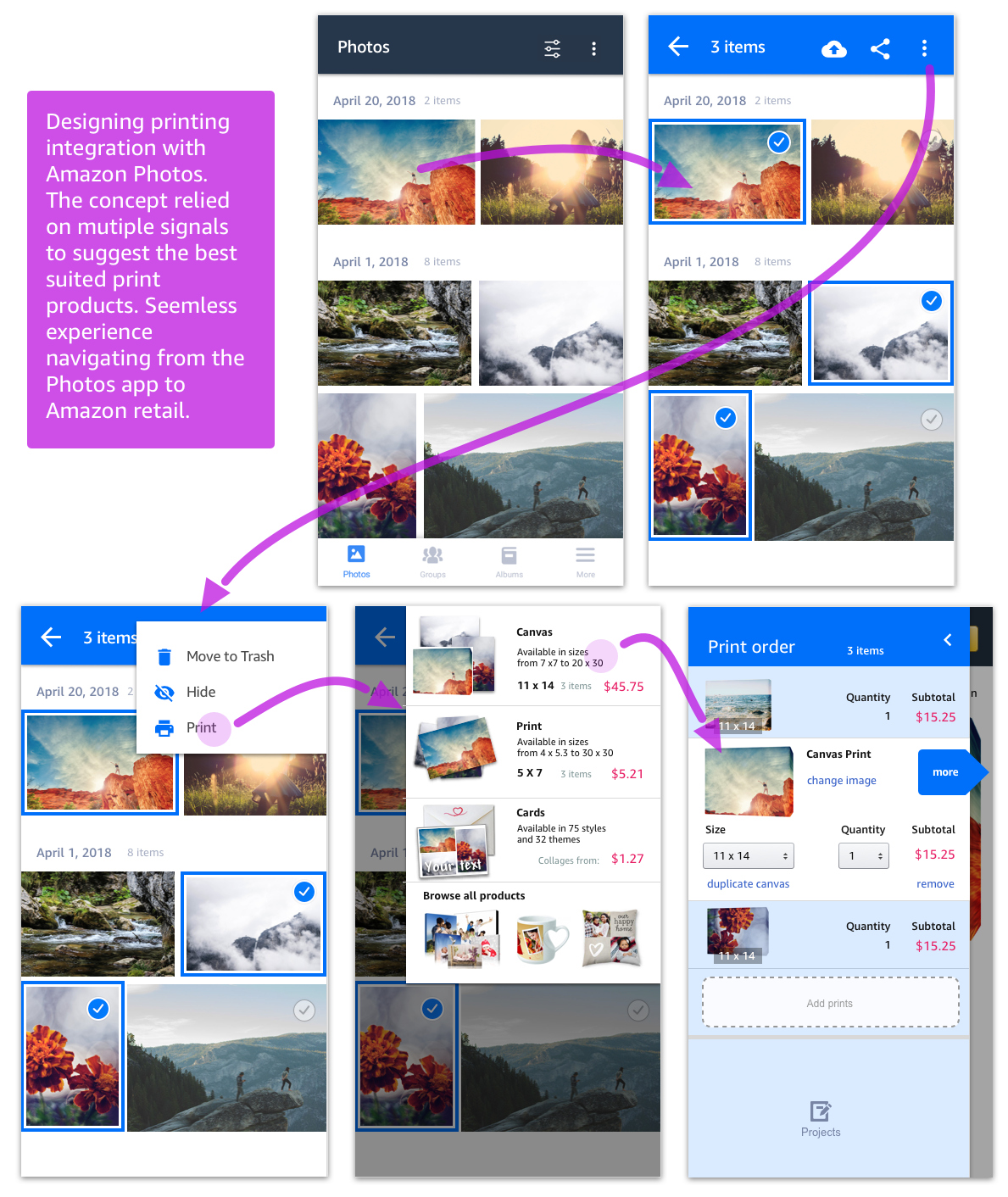

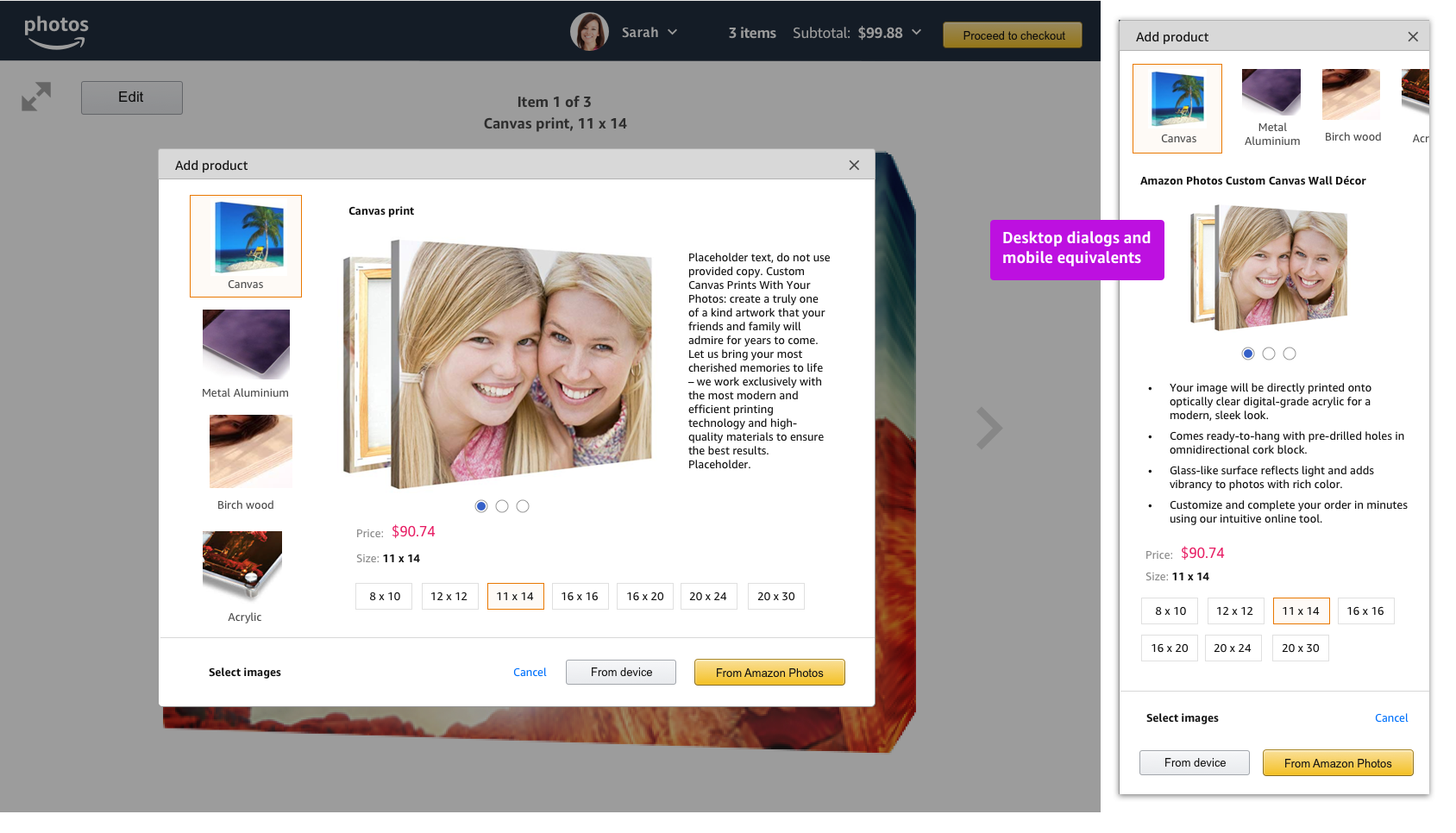

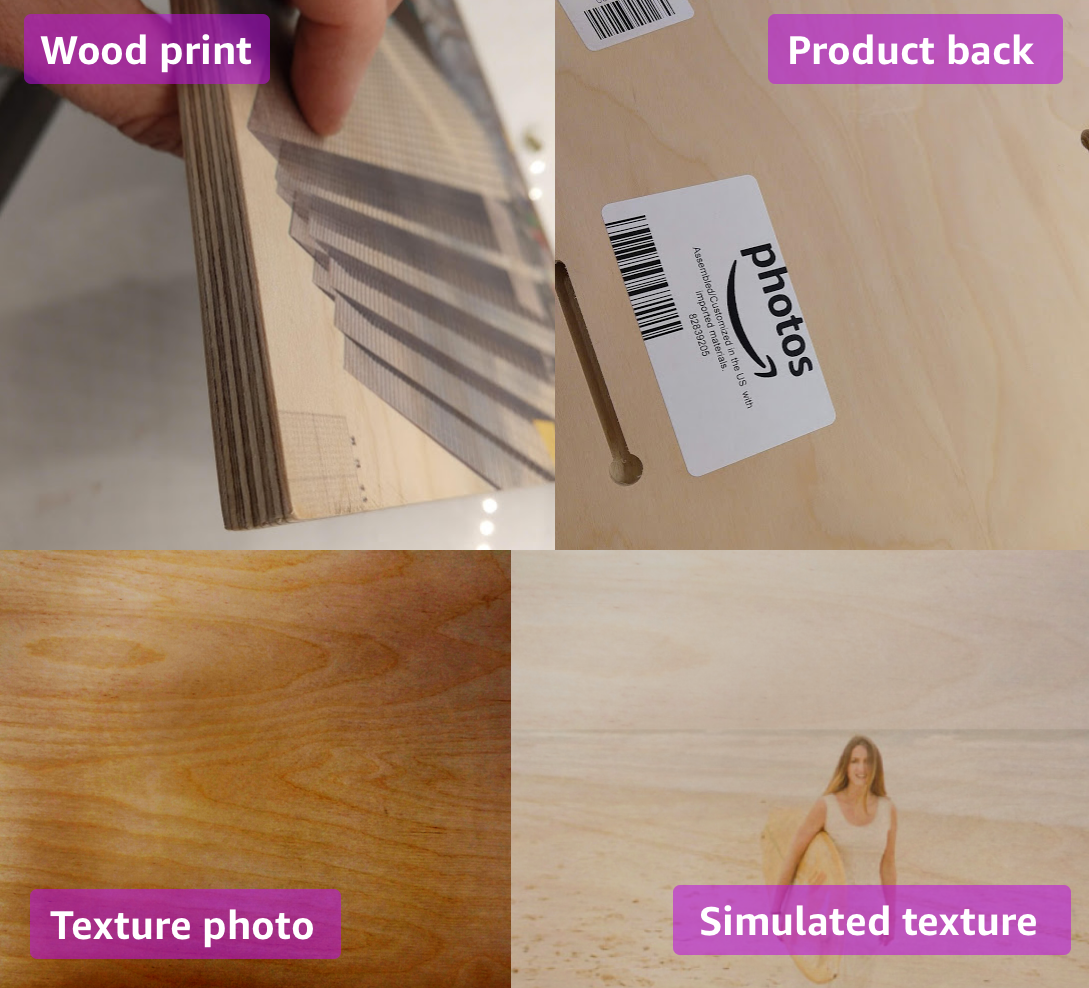

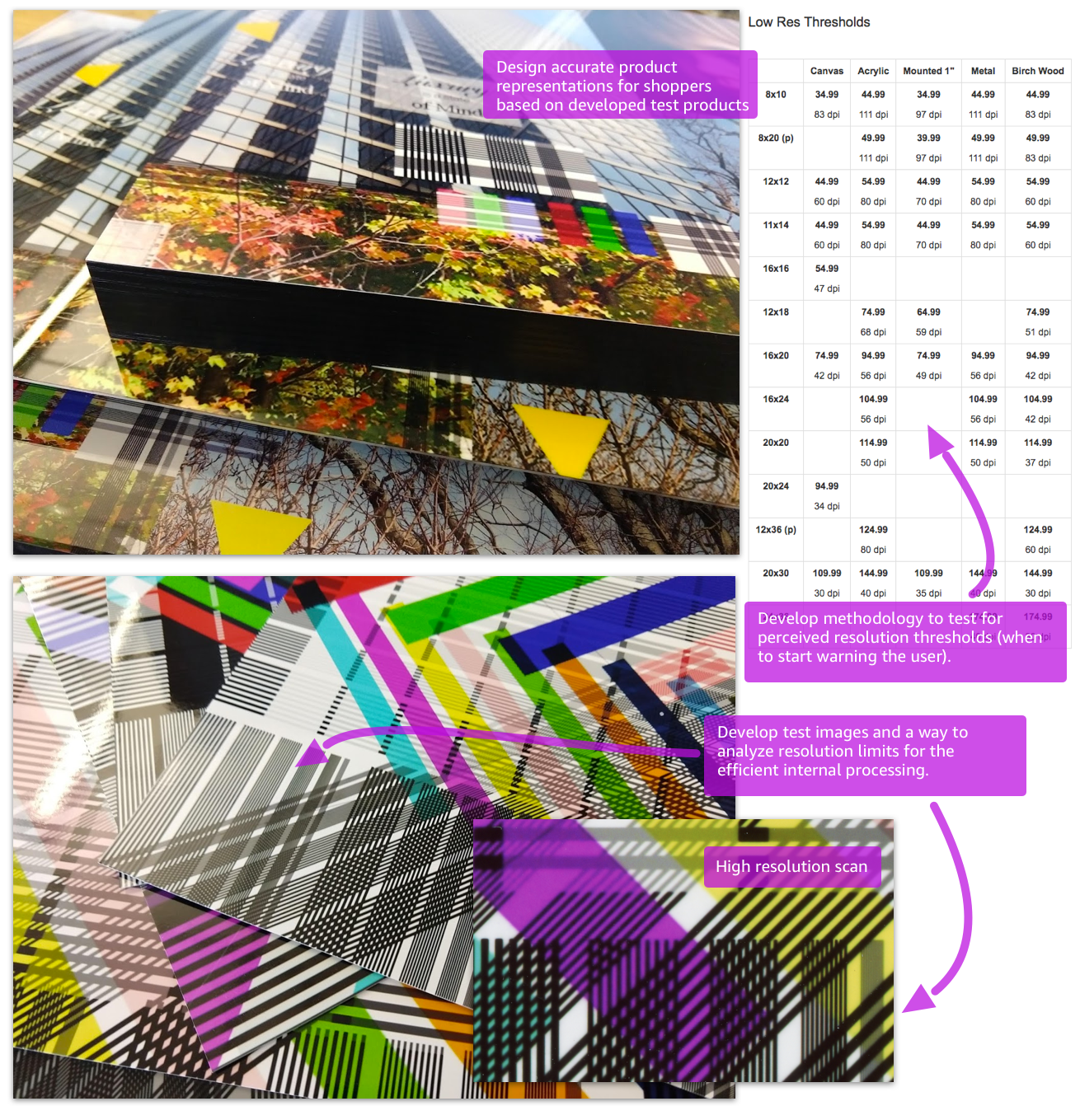

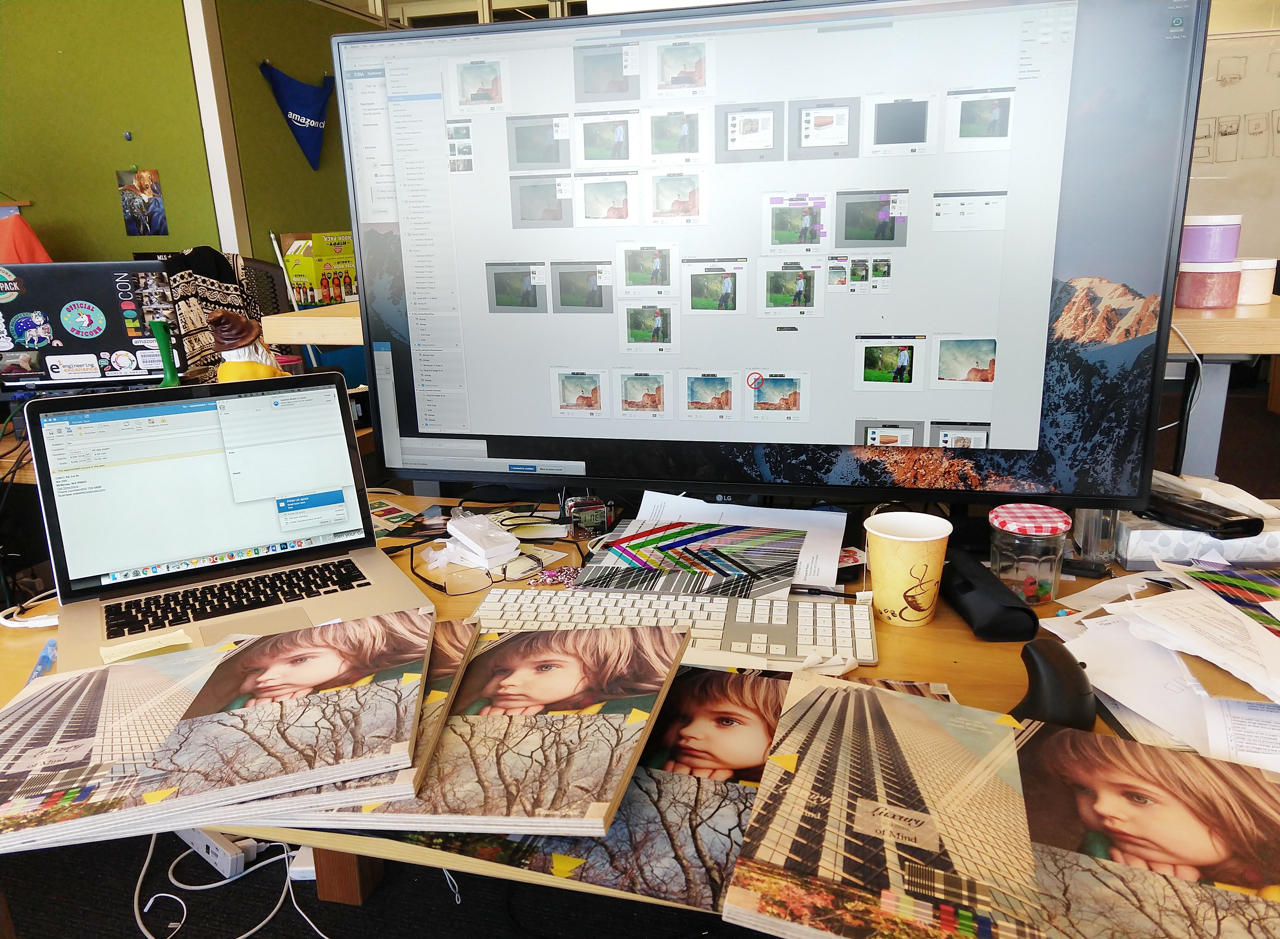

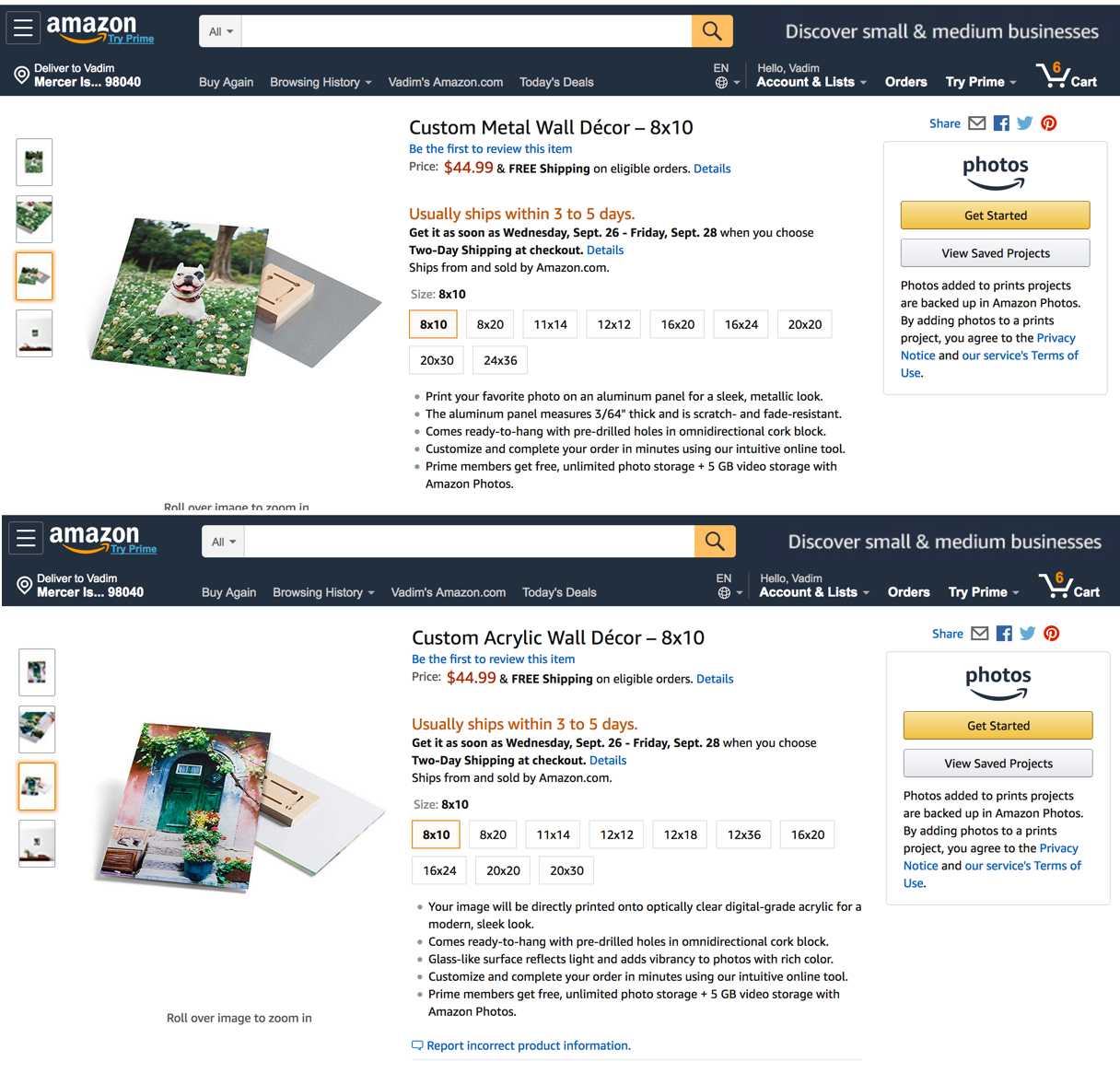

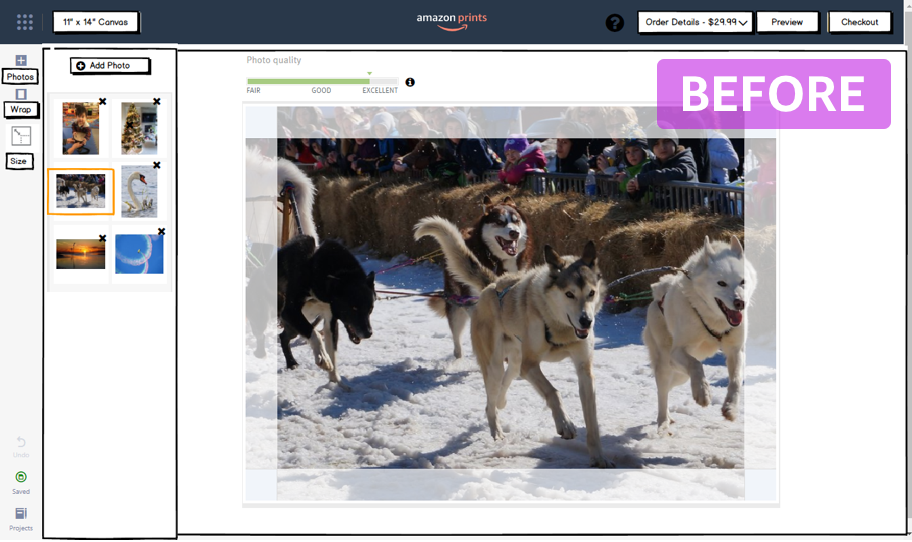

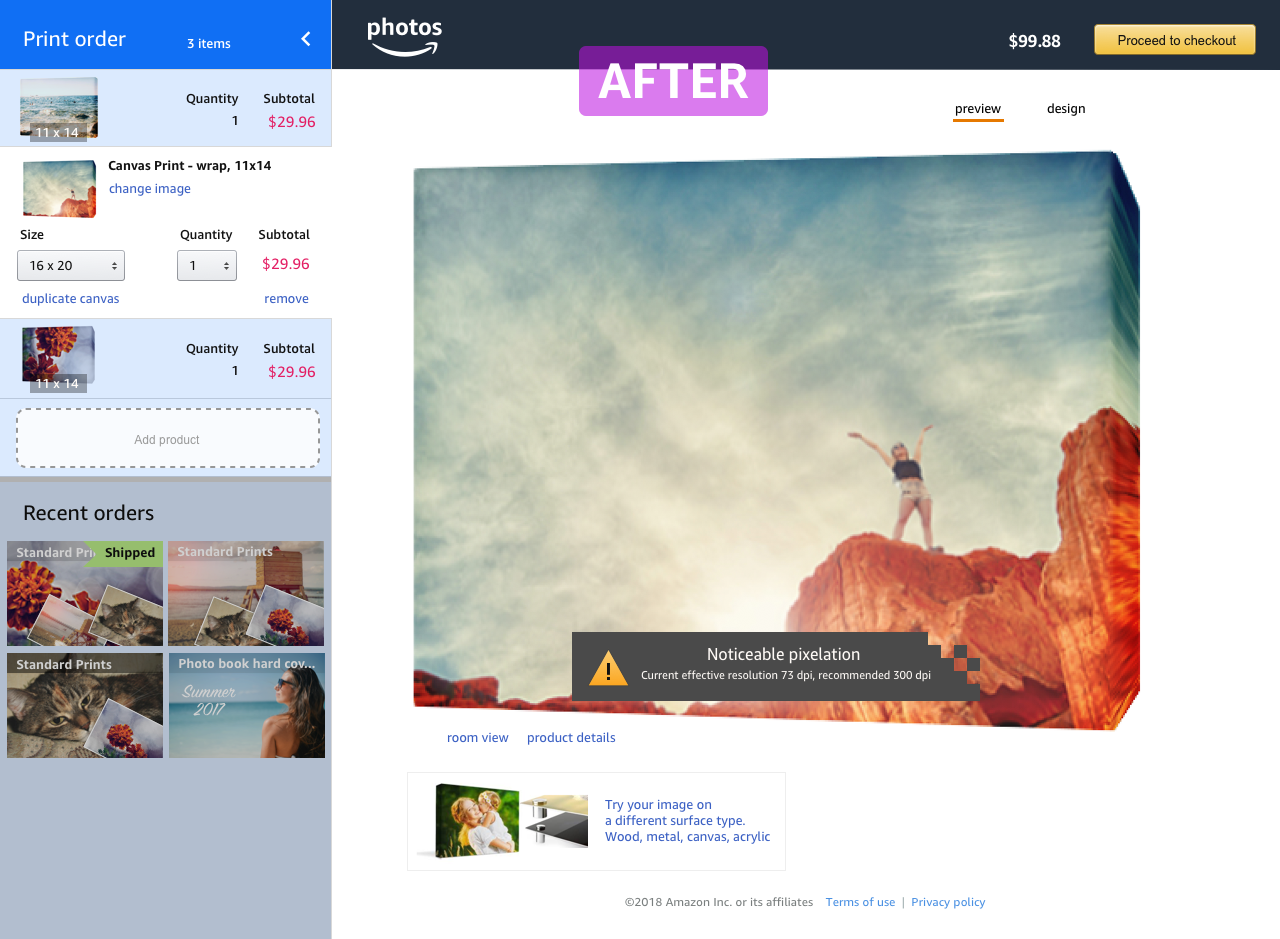

Amazon Prints lets users order regular paper photo prints or wall décor products (canvas, acrylic, wood, etc.) from within Amazon and have them shipped to the customer. What seems like a simple problem of “just upload the images, pick the product, and receive prints” has many UX challenges, especially at Amazon's scale. I joined the team to create a new, intuitive experience for ordering and editing wall décor products, and in the process ended up redesigning most of the existing prints experience. Good design is not simply a matter of enabling functionality; it combines an understanding of the users and the business with careful attention to the details of the experience. Below are some examples of the details that went into the design.